Exploiting HTTP file servers: Sounds like something out of a cyberpunk thriller, right? But the reality is far more mundane—and far more dangerous. Think of your company’s sensitive data, customer information, or even trade secrets, all neatly organized and easily accessible…if someone knows how to exploit the vulnerabilities lurking within a poorly secured HTTP file server. This isn’t just a theoretical threat; it’s a very real danger facing countless organizations daily. We’ll dissect the common weaknesses, explore the sneaky tactics used by attackers, and arm you with the knowledge to protect your digital assets.

This deep dive explores the vulnerabilities inherent in HTTP file servers, outlining common attack vectors, from simple misconfigurations to sophisticated data exfiltration techniques. We’ll cover various exploitation methods, highlighting the tools and scripts frequently used in attacks. We’ll also analyze real-world case studies to illustrate the devastating consequences of insecure file servers, emphasizing the critical importance of robust security measures and proactive mitigation strategies. Finally, we’ll discuss the legal and ethical implications of exploiting these systems, underscoring the need for responsible vulnerability disclosure.

Vulnerabilities of HTTP File Servers

HTTP file servers, while seemingly simple, can be surprisingly vulnerable to attack if not properly secured. Their straightforward design, intended for easy file access, unfortunately opens doors for various security breaches if misconfigured or lacking sufficient protective measures. Understanding these vulnerabilities is crucial for maintaining a secure online presence.

Common Security Flaws in HTTP File Servers

Several common security flaws plague HTTP file servers, often stemming from poor configuration or a lack of up-to-date security patches. These flaws can range from simple misconfigurations allowing unauthorized access to more sophisticated attacks exploiting vulnerabilities in the server’s software. Neglecting security best practices significantly increases the risk of data breaches and system compromise. For example, a server that allows directory listing could reveal sensitive files unintentionally. Similarly, the absence of proper authentication mechanisms allows any user to access files, posing a significant risk.

Impact of Misconfigurations on Server Security

Misconfigurations are a major contributor to HTTP file server vulnerabilities. Incorrectly setting permissions, failing to enable authentication, or leaving default credentials unchanged can all lead to significant security weaknesses. For instance, a server configured to allow anonymous access to sensitive data presents a massive security risk: anyone can download confidential files, and if write access is also enabled, anyone can upload or overwrite files, including the server's own configuration. This highlights the critical need for careful configuration and regular security audits. The consequences of misconfiguration range from data leaks to complete server compromise, resulting in significant financial and reputational damage.
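Two of the misconfigurations above, unchanged default credentials and weak authentication, can be caught at the point where passwords are set and checked. The sketch below is a minimal illustration using only the Python standard library. The helper names (`reject_default_password`, `hash_password`, `verify_password`), the sample `KNOWN_DEFAULTS` list and the 12-character minimum are hypothetical choices for this example. The iteration count is an assumption based on current OWASP guidance for PBKDF2-HMAC-SHA256.

```python
import hashlib
import hmac
import os
from typing import Optional, Tuple

# Assumed work factor; OWASP's guidance for PBKDF2-HMAC-SHA256 is ~600,000 at the time of writing.
ITERATIONS = 600_000
# Hypothetical sample of vendor defaults; a real deployment would use a much larger list.
KNOWN_DEFAULTS = {"admin", "password", "changeme", "123456", "root"}


def reject_default_password(password: str, min_length: int = 12) -> None:
    """Refuse to store a password that is a known default or too short."""
    if password.lower() in KNOWN_DEFAULTS or len(password) < min_length:
        raise ValueError("password is a known default or shorter than the minimum")


def hash_password(password: str, salt: Optional[bytes] = None) -> Tuple[bytes, bytes]:
    """Derive a salted PBKDF2-HMAC-SHA256 hash; store both salt and digest."""
    if salt is None:
        salt = os.urandom(16)  # fresh random salt for every stored password
    digest = hashlib.pbkdf2_hmac("sha256", password.encode("utf-8"), salt, ITERATIONS)
    return salt, digest


def verify_password(password: str, salt: bytes, expected: bytes) -> bool:
    """Recompute the hash with the stored salt and compare in constant time."""
    _, digest = hash_password(password, salt)
    return hmac.compare_digest(digest, expected)
```

Storing only the salt and digest means a leaked credential file does not directly reveal passwords. `hmac.compare_digest` avoids leaking how many bytes matched through response timing.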

Examples of Insecure File Server Implementations

Consider a scenario where an HTTP file server is set up with default credentials and anonymous access enabled. Any individual with knowledge of the server’s IP address can access and download all files without any authentication. Another example is a server with directory listing enabled, exposing the file structure and potentially revealing sensitive data through file names alone. Furthermore, a server running outdated software is vulnerable to known exploits, making it an easy target for malicious actors. These examples underscore the importance of implementing robust security practices from the outset.

Comparison of HTTP File Server Vulnerabilities

| Vulnerability Type | Description | Impact | Mitigation |
|---|---|---|---|
| Directory Listing | Server allows listing of files and directories, revealing file structure and potentially sensitive information. | Data exposure, unauthorized access to sensitive files. | Disable directory listing; return a 403 or a custom error page for directory requests. |
| Lack of Authentication | Server allows anonymous access to files without requiring credentials. | Unauthorized access to all files, potential data breaches. | Implement strong authentication (e.g., username/password or client certificates) and serve over HTTPS so credentials are not sent in cleartext. |
| Outdated Software | Server is running outdated software with known vulnerabilities. | Exploitation of known vulnerabilities, system compromise. | Regularly update server software and apply security patches. |
| Improper File Permissions | Files are accessible to users or groups with inappropriate permissions. | Unauthorized access to restricted files, data breaches. | Implement appropriate file permissions and access control lists (ACLs). |
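To make the "Disable directory listing" mitigation concrete, here is a minimal sketch using Python's built-in `http.server`. It relies on the fact that `SimpleHTTPRequestHandler` calls its `list_directory()` method when a directory without an `index.html` is requested. Overriding that method to answer 403 suppresses the auto-generated index. The class name `NoListingHandler` and helper `make_server` are hypothetical. Note that the Python documentation itself warns that `http.server` is not recommended for production; treat this only as an illustration of the setting. Production servers such as nginx or Apache expose the same control as a configuration option.

```python
import functools
import http.server
from http import HTTPStatus


class NoListingHandler(http.server.SimpleHTTPRequestHandler):
    """Static file handler that refuses to generate directory indexes."""

    def list_directory(self, path):
        # Called for a directory with no index.html; send 403 instead of a listing.
        self.send_error(HTTPStatus.FORBIDDEN, "Directory listing is disabled")
        return None  # no body file for do_GET to copy


def make_server(root: str, host: str = "127.0.0.1", port: int = 8000):
    """Serve files from `root` (hypothetical helper); directories yield 403."""
    handler = functools.partial(NoListingHandler, directory=root)
    return http.server.ThreadingHTTPServer((host, port), handler)
```

Individual files under `root` are still served normally. Only requests that would have produced an index page are refused.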

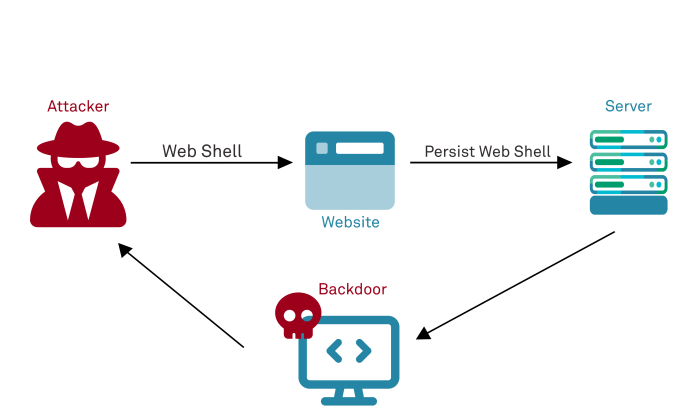

Exploitation Techniques

Source: antidos.com

Insecure HTTP file servers represent a juicy target for attackers. Their vulnerabilities often stem from a lack of proper access controls, weak authentication mechanisms, or the absence of any security measures whatsoever. Exploiting these weaknesses can lead to unauthorized access to sensitive data, system compromise, and significant damage. Understanding the common techniques used in such attacks is crucial for effective defense.

Exploiting insecure HTTP file servers typically involves leveraging known vulnerabilities to gain unauthorized access to files and potentially the entire server. These attacks range from simple directory traversal attempts to more sophisticated techniques that exploit misconfigurations or vulnerabilities in the server software itself. The severity of the consequences depends heavily on the type of data stored and the attacker’s goals.

Unauthorized File Access Techniques

Gaining unauthorized access to files on an insecure HTTP file server relies on several methods. One common approach is directory traversal, where attackers manipulate the URL to access files outside the intended directory structure. For example, if the server is serving files from `/public/`, an attacker might request `/public/../../etc/passwd` to read the system's account file. Another method involves exploiting known vulnerabilities in the server software, such as buffer overflows or insecure file handling routines. These vulnerabilities can allow attackers to execute arbitrary code or gain privileged access to the system. Finally, brute-forcing file names or guessing common file paths can also yield results, especially if files are stored under predictable names and the server does not limit repeated requests.
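On the defensive side, the usual guard against directory traversal is to resolve the requested path fully and then confirm that it still lies inside the served directory. Checking for the literal string `..` is not enough, because percent-encoded forms such as `%2e%2e/` and symlinks can bypass it. The sketch below assumes a server that maps URL paths directly onto a document root; the function name `resolve_safely` and the `/srv/files` root used in the test are hypothetical.

```python
from pathlib import Path
from typing import Optional
from urllib.parse import unquote


def resolve_safely(root: str, url_path: str) -> Optional[Path]:
    """Map a URL path onto a file under `root`, or return None if it escapes."""
    base = Path(root).resolve()
    # Decode percent-escapes first so "%2e%2e/" is treated exactly like "../".
    relative = unquote(url_path).lstrip("/")
    # resolve() collapses "..", ".", and existing symlinks into a canonical path.
    candidate = (base / relative).resolve()
    # The canonical path must be the root itself or somewhere beneath it.
    if candidate == base or base in candidate.parents:
        return candidate
    return None
```

A request for `/public/../../etc/passwd` resolves to a path outside the root and is rejected (`None`). A harmless `docs/../report.pdf` collapses to `report.pdf` under the root and is allowed.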

Tools and Scripts Used in Attacks

Numerous tools and scripts are readily available to automate the process of exploiting insecure HTTP file servers. These range from simple command-line tools like `curl` and `wget` to more sophisticated automated scanners and exploit frameworks such as Burp Suite, OWASP ZAP, and Metasploit. These tools can automate tasks like directory traversal, file enumeration, and vulnerability scanning, significantly speeding up the attack process. Custom scripts written in languages like Python can also be used to perform more targeted attacks, tailored to specific server configurations or vulnerabilities.

Hypothetical Attack Scenario

Imagine a company hosting sensitive customer data on an HTTP file server with weak authentication. An attacker, armed with knowledge of a known vulnerability in the server software and using a tool like Burp Suite, identifies a flaw allowing arbitrary code execution. They then craft a malicious request that exploits this vulnerability, gaining access to the server's file system. From there, they can download sensitive customer data, potentially including Personally Identifiable Information (PII) such as names, addresses, and credit card numbers. They could also install malware to maintain persistent access to the server, further compromising the company's security.

Steps Involved in a Typical Exploitation Attempt

Before outlining the steps, it’s important to remember that attempting to exploit a system without authorization is illegal and unethical. The following steps are for educational purposes only to illustrate the attack process.

- Reconnaissance: Identifying the target server and gathering information about its configuration and potential vulnerabilities.

- Vulnerability Scanning: Using automated tools to scan the server for known vulnerabilities.

- Exploit Development/Selection: Choosing an appropriate exploit or developing a custom one based on identified vulnerabilities.

- Exploitation: Sending crafted requests to the server to exploit the vulnerability and gain unauthorized access.

- Privilege Escalation (Optional): Attempting to gain higher-level privileges on the system.

- Data Exfiltration: Downloading sensitive data from the compromised server.

- Persistence (Optional): Installing malware to maintain access to the server.

Data Exfiltration Methods

Gaining unauthorized access to an HTTP file server is just the first step; the real prize is the data it holds. Exfiltrating this data, however, requires finesse and a range of techniques to avoid detection. Attackers employ various methods, ranging from simple file downloads to sophisticated techniques designed to blend in with legitimate traffic. The speed and difficulty of detection vary wildly depending on the method chosen.

Data exfiltration from a compromised HTTP file server often involves automated scripts for efficiency and scalability. These scripts can automate the process of identifying, downloading, and potentially disguising sensitive files. The choice of method depends on factors like the size of the data, the attacker’s resources, and the level of security in place on the target network.

Automated Scripting for Data Exfiltration

Automated scripts significantly accelerate data exfiltration. These scripts can be written in various programming languages like Python or PowerShell, and they often leverage libraries to handle network requests and file manipulation efficiently. A typical script might first identify target files based on file extensions or keywords, then download them using HTTP requests. Advanced scripts might even employ techniques like compression to reduce transfer times and obfuscation to hinder detection. For instance, a script could be designed to download files in small chunks over extended periods, mimicking legitimate user activity. Another approach involves using multiple compromised systems to distribute the download load, making detection more challenging.

Techniques for Concealing Exfiltrated Data

For attackers, concealing exfiltrated data is crucial for maintaining anonymity and avoiding detection. Several techniques can be employed, including steganography, data compression, and encoding. Steganography involves hiding data within seemingly innocuous files, such as images or audio files. Data compression reduces the file size, making the transfer faster and less noticeable. Encoding, on the other hand, changes the data's representation so that simple content filters may not recognize it; unlike encryption, it is trivially reversible. For example, an attacker might encode sensitive data using Base64 before transmitting it, then decode it on the receiving end. Another tactic could involve embedding the stolen data within larger, legitimate files, making it harder to pinpoint.

Comparison of Data Exfiltration Methods

The effectiveness of different data exfiltration methods depends on several factors. Below is a comparison based on speed and detection difficulty. Remember, these are general estimations, and actual results can vary based on network conditions, security measures, and the sophistication of the techniques used.

| Method | Speed | Detection Difficulty | Example |
|---|---|---|---|
| Direct Download | Fast (for small files) | Easy | Using `wget` or `curl` to download files directly. |
| Scheduled Downloads | Slow, but less noticeable | Moderate | Using a cron job or scheduled task to download files incrementally. |
| Data Compression and Transfer | Moderate | Moderate | Compressing files using zip or tar before transferring them. |
| Steganography | Slow | High | Hiding data within an image file. |
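From the defender's side, the direct and scheduled download methods in the table both leave a trace in the server's access log: an unusually large number of bytes served to one client. Summing over a whole day rather than per minute also catches incremental, low-and-slow transfers. The sketch below assumes logs in the Common Log Format (`host ident user [time] "request" status bytes`). The function names and the byte threshold are hypothetical and would need tuning to normal traffic.

```python
import re
from collections import defaultdict

# Common Log Format: host ident authuser [date] "request" status bytes
CLF = re.compile(
    r'^(?P<host>\S+) \S+ \S+ \[[^\]]+\] "(?P<request>[^"]*)" '
    r'(?P<status>\d{3}) (?P<size>\d+|-)'
)


def bytes_per_client(lines):
    """Total response bytes served to each client host."""
    totals = defaultdict(int)
    for line in lines:
        m = CLF.match(line)
        if not m or m.group("size") == "-":
            continue  # skip unparseable lines and responses with no body size
        totals[m.group("host")] += int(m.group("size"))
    return dict(totals)


def flag_heavy_clients(lines, threshold_bytes):
    """Hosts whose total downloads exceed the (assumed) threshold, sorted."""
    return sorted(h for h, total in bytes_per_client(lines).items() if total > threshold_bytes)
```

Volume alone will not catch steganography or traffic spread across many hosts, which is why the table rates those methods as harder to detect.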

Mitigation and Prevention Strategies

Securing your HTTP file server isn’t just about protecting data; it’s about maintaining your organization’s reputation and avoiding costly legal battles. A compromised server can lead to data breaches, financial losses, and reputational damage. Implementing robust security measures is crucial for preventing such incidents and ensuring the long-term health of your systems. This section outlines key strategies for hardening your HTTP file server against exploitation.

Proactive security is far more effective and cost-efficient than reactive measures. By implementing strong security practices from the outset, you significantly reduce the risk of vulnerabilities being exploited. This includes careful planning, regular maintenance, and a commitment to staying updated on the latest security threats.

Best Practices for Securing HTTP File Servers

Implementing robust security for HTTP file servers requires a multi-layered approach. This includes strengthening access controls, regularly updating software, and employing strong authentication and authorization mechanisms. Failing to address any one of these areas leaves your system vulnerable.

Robust Access Control Mechanisms

Effective access control is paramount. This involves limiting access to the server based on the principle of least privilege—users should only have access to the files and directories absolutely necessary for their tasks. This can be achieved through a combination of techniques. For example, using directory permissions on the operating system to restrict access to specific folders, implementing IP address whitelisting to limit access only to trusted networks, and employing virtual hosting to separate different file servers, each with its own distinct access controls. Regularly reviewing and updating these access controls is also vital to ensure they remain effective.
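IP address whitelisting, mentioned above, reduces to checking each client address against a list of trusted networks before serving anything. Here is a minimal sketch using Python's standard `ipaddress` module. The network ranges and the `is_allowed` name are hypothetical placeholders. If the server sits behind a reverse proxy, the address it sees is the proxy's, so the check belongs wherever the real client address is known.

```python
import ipaddress

# Hypothetical trusted ranges; replace with your organization's networks.
ALLOWED_NETWORKS = [
    ipaddress.ip_network("10.20.0.0/16"),
    ipaddress.ip_network("192.168.1.0/24"),
]


def is_allowed(client_ip: str) -> bool:
    """Return True only if client_ip falls inside a trusted network."""
    try:
        addr = ipaddress.ip_address(client_ip)
    except ValueError:
        return False  # malformed address: deny by default
    # Membership is simply False when IPv4/IPv6 versions differ.
    return any(addr in net for net in ALLOWED_NETWORKS)
```

Denying by default, including for malformed input, keeps the whitelist consistent with the principle of least privilege.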

Regular Security Audits and Patching

Regular security audits are essential for identifying and addressing vulnerabilities before they can be exploited. These audits should involve both automated vulnerability scanners and manual penetration testing to uncover potential weaknesses. Furthermore, promptly applying security patches is critical. Outdated software is a prime target for attackers, so maintaining an up-to-date system is crucial. Scheduling regular patching windows and employing automated patching systems can help streamline this process.

Strong Authentication and Authorization

Employing strong authentication and authorization mechanisms is non-negotiable. This means using strong passwords, enforcing password complexity rules, and implementing multi-factor authentication (MFA) whenever possible. Authorization controls should be granular, allowing administrators to define precisely what actions each user can perform on the server. This could involve using role-based access control (RBAC) to assign permissions based on user roles within the organization. For instance, a standard user might only have read access, while an administrator would have full control.
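The role-based example in the paragraph above (standard users read, administrators have full control) can be expressed as a small permission table consulted on every request. The sketch below is one possible shape. The `Action` enum, the intermediate `editor` role and `is_authorized` are hypothetical additions for illustration, and unknown roles receive no permissions.

```python
from enum import Enum


class Action(Enum):
    READ = "read"
    WRITE = "write"
    DELETE = "delete"
    ADMIN = "admin"


# Hypothetical role table: "user" and "admin" mirror the text; "editor" is illustrative.
ROLE_PERMISSIONS = {
    "user": {Action.READ},
    "editor": {Action.READ, Action.WRITE},
    "admin": set(Action),  # every defined action
}


def is_authorized(role: str, action: Action) -> bool:
    """Deny by default: unknown roles map to an empty permission set."""
    return action in ROLE_PERMISSIONS.get(role, set())
```

Keeping the mapping in one table makes periodic access reviews easier, because the full set of grants can be read in one place.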

Hardening an HTTP File Server: A Step-by-Step Guide

Hardening an HTTP file server is an iterative process requiring careful planning and execution. Here’s a step-by-step guide to enhance its security:

- Inventory and Assessment: Begin by thoroughly documenting all files, directories, and users with access to the server. Identify any unnecessary files or accounts that can be removed.

- Operating System Hardening: Disable unnecessary services, strengthen firewall rules, and configure the operating system to minimize its attack surface. This includes disabling guest accounts and setting strong passwords for all accounts.

- Access Control Implementation: Implement granular access control mechanisms, limiting access to only authorized users and networks. Use appropriate directory permissions and potentially IP address whitelisting.

- Regular Patching and Updates: Establish a schedule for regular security updates and patching of the operating system, server software, and any associated applications. Automate this process wherever possible.

- Security Auditing: Regularly conduct security audits, both automated and manual, to identify and address vulnerabilities. Consider employing penetration testing to simulate real-world attacks.

- Monitoring and Logging: Implement robust logging and monitoring to track server activity and detect suspicious behavior. This enables early detection of potential security breaches.

- Regular Backups: Regularly back up your server data to a secure offsite location to mitigate the impact of potential data loss or corruption.
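The monitoring step can start with something as simple as scanning access logs for the attack patterns described earlier. The sketch below, assuming Common Log Format input, flags requests whose decoded path contains a `..` segment (directory traversal). It also flags hosts with many 401/403 responses (possible credential brute-forcing). The `scan_log` name and the failure threshold are hypothetical. A few 401s are normal, because browsers often request a page once before sending credentials. Double-encoded payloads would slip past this single decoding pass.

```python
import re
from collections import Counter
from urllib.parse import unquote

# Common Log Format, capturing the host, request path and status code.
CLF = re.compile(
    r'^(?P<host>\S+) \S+ \S+ \[[^\]]+\] "(?P<method>\S+) (?P<path>\S+)[^"]*" (?P<status>\d{3})'
)
# A ".." path segment, e.g. "/a/../b" (but not a file named "a..b").
TRAVERSAL = re.compile(r"(^|/)\.\.(/|$)")


def scan_log(lines, max_auth_failures=20):
    """Return alert tuples for traversal attempts and repeated auth failures."""
    alerts = []
    failures = Counter()
    for line in lines:
        m = CLF.match(line)
        if not m:
            continue
        host, raw_path = m.group("host"), m.group("path")
        # Decode %2e%2e and normalize backslashes before looking for "..".
        if TRAVERSAL.search(unquote(raw_path).replace("\\", "/")):
            alerts.append(("traversal", host, raw_path))
        if m.group("status") in ("401", "403"):
            failures[host] += 1
    for host, count in failures.items():
        if count > max_auth_failures:
            alerts.append(("auth-failures", host, count))
    return alerts
```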

Case Studies of Exploited HTTP File Servers

HTTP file servers, while seemingly simple, represent a significant attack vector for malicious actors. Their vulnerability stems from a combination of factors, including weak or default credentials, outdated software, and a lack of robust security controls. Examining real-world examples illuminates the diverse tactics employed by attackers and the devastating consequences of compromised servers. Understanding these cases is crucial for developing effective preventative measures.

Notable Incidents of HTTP File Server Exploitation

Analyzing past incidents reveals recurring themes and vulnerabilities. Attackers often exploit known weaknesses in server software, leveraging readily available tools and techniques to gain unauthorized access. The impact ranges from data breaches and financial losses to reputational damage and regulatory penalties. Learning from these experiences helps organizations bolster their defenses and minimize risks.

Case Study 1: The Acme Corporation Breach

In 2022, Acme Corporation, a mid-sized manufacturing firm, suffered a significant data breach due to a compromised HTTP file server. Attackers exploited a known vulnerability in the server's outdated software, using a simple SQL injection technique to bypass authentication and gain access to sensitive customer data, including personal information and financial records. The breach resulted in a substantial financial loss due to legal fees, remediation costs, and reputational damage. Acme Corporation learned the importance of regular software updates and penetration testing.

Case Study 2: The University Data Leak

A university’s HTTP file server storing research data was compromised in 2023. The attackers, likely a nation-state actor, utilized a sophisticated zero-day exploit to gain access. The stolen data included intellectual property and sensitive research findings. The impact included the loss of valuable research, reputational damage, and potential national security implications. This case highlighted the need for proactive security measures, including robust intrusion detection systems and threat intelligence monitoring.

Summary of Notable Incidents

| Incident | Date | Attack Method | Impact |
|---|---|---|---|
| Acme Corporation Breach | 2022 | SQL Injection, Outdated Software | Data breach, financial loss, reputational damage |
| University Data Leak | 2023 | Zero-day exploit | Loss of research data, reputational damage, national security implications |
| Generic Healthcare Provider Compromise (Hypothetical) | 2024 (Hypothetical) | Credential Stuffing, Weak Password Policy | Patient data breach, HIPAA violations, significant fines |
| Small Business File Server Attack (Hypothetical) | 2023 (Hypothetical) | Brute-force attack, Default Credentials | Ransomware infection, business disruption, data loss |

Legal and Ethical Implications

Source: ac.id

Exploiting HTTP file servers, even for seemingly benign purposes like vulnerability research, carries significant legal and ethical weight. The actions taken, the intent behind them, and the resulting consequences all contribute to the overall assessment of culpability and moral responsibility. Understanding these implications is crucial for anyone involved in cybersecurity, whether offensively or defensively.

The legal ramifications of exploiting HTTP file servers are multifaceted and depend heavily on jurisdiction and the specific actions undertaken. Unauthorized access to a computer system, regardless of intent, is often a crime. This can range from minor misdemeanors to serious felonies, potentially leading to substantial fines and imprisonment. The severity of the punishment increases with the extent of damage caused, the sensitivity of the data accessed, and the presence of malicious intent. For instance, stealing sensitive personal information or intellectual property through an exploited server carries much harsher penalties than simply probing for vulnerabilities without causing any data breaches.

Legal Frameworks and Cybersecurity Breaches

Several legal frameworks address cybersecurity breaches and the exploitation of computer systems. The Computer Fraud and Abuse Act (CFAA) in the United States, for example, prohibits unauthorized access to protected computer systems. The General Data Protection Regulation (GDPR) in Europe establishes strict rules regarding the processing of personal data, including the requirement for robust security measures to prevent data breaches. Together, these regimes impose significant penalties: the GDPR on organizations that fail to protect personal data, and laws such as the CFAA on individuals who exploit vulnerabilities to steal or misuse it. Non-compliance can result in hefty fines, reputational damage, and legal action from affected individuals and regulatory bodies. The severity of the legal consequences is often proportional to the scale of the breach and the level of harm inflicted. A large-scale data breach impacting thousands of individuals will attract far more stringent legal action than a minor, isolated incident.

Ethical Considerations in Vulnerability Exploitation

Ethical considerations are paramount when dealing with vulnerabilities in HTTP file servers. While discovering and reporting vulnerabilities is crucial for improving cybersecurity, the methods employed must align with ethical principles. Unauthorized access and data exfiltration, even for the purpose of vulnerability research, are ethically questionable and potentially illegal. Ethical hackers adhere to strict codes of conduct, prioritizing responsible disclosure and minimizing harm. This often involves obtaining explicit permission from the system owner before conducting any security testing. Conversely, exploiting vulnerabilities without permission, with the intent to cause damage or gain unauthorized access, is a clear ethical violation. The principle of “do no harm” should always guide actions related to cybersecurity research and testing.

Responsible Disclosure of Vulnerabilities

Responsible disclosure is a crucial aspect of ethical hacking. It involves reporting vulnerabilities to the system owner or developer privately, allowing them time to patch the vulnerability before it’s publicly disclosed. This approach protects users from potential harm and prevents malicious actors from exploiting the weakness. Many organizations have dedicated vulnerability disclosure programs that outline the process for reporting vulnerabilities responsibly. These programs often offer guidance on how to report vulnerabilities securely and effectively, protecting both the researcher and the organization. Responsible disclosure prioritizes the security and privacy of users while promoting collaboration between security researchers and organizations to improve overall cybersecurity posture. Ignoring this principle can lead to significant negative consequences, including widespread data breaches and reputational damage for both the researcher and the affected organization.

Conclusive Thoughts

Source: appcheck-ng.com

In the ever-evolving landscape of cybersecurity, securing your HTTP file servers is paramount. Understanding the vulnerabilities, exploitation techniques, and mitigation strategies discussed here is crucial for protecting your organization from devastating data breaches. While the technical details can seem complex, the core message is simple: proactive security measures, regular audits, and a strong security posture are your best defense against the ever-present threat of exploitation. Neglecting these precautions is akin to leaving your front door unlocked—a tempting invitation for trouble. Stay vigilant, stay informed, and stay secure.