A proof-of-concept (POC) exploit against an HTTP file server sounds intense, right? It is. This isn’t just tech jargon; it’s about the real-world danger lurking in seemingly harmless file servers. We’ll dissect the architecture of these servers, expose common security flaws, and explore the chillingly effective techniques hackers use to exploit them. Get ready to understand how these attacks work, the devastating consequences they can unleash, and, most importantly, how to protect yourself.

We’ll cover everything from understanding the vulnerabilities inherent in poorly configured HTTP file servers to examining real-world case studies (anonymized, of course!). We’ll also delve into the ethical considerations surrounding proof-of-concept exploits and provide practical, actionable steps to bolster your server’s security. Think of this as your survival guide in the wild west of online security.

Understanding HTTP File Servers and Vulnerabilities

HTTP file servers, at their core, are simple: they serve files over the HTTP protocol. This seemingly straightforward function, however, presents a significant attack surface if not properly secured. Understanding the architecture and common misconfigurations is crucial for preventing exploitation.

HTTP File Server Architecture

A typical HTTP file server consists of several key components. First, there’s the server software itself (e.g., Apache, Nginx), responsible for listening for incoming HTTP requests and serving files based on those requests. This software interacts with the operating system, accessing files from the server’s file system. Crucially, configuration files dictate how the server behaves, specifying things like which directories are accessible, authentication methods, and error handling. Finally, the server’s network configuration determines which IP addresses and ports it listens on, controlling external access. A poorly configured server can expose sensitive data or allow unauthorized access to the entire file system.
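To make those components concrete, here is a minimal sketch using Python’s standard-library `http.server`. The `./public` directory, the port, and the `build_server` helper are assumptions chosen for illustration. Python’s own documentation warns that `http.server` implements only basic security checks and is not recommended for production, so treat this as a way to see the moving parts rather than a deployable server.

```python
import functools
import http.server
from pathlib import Path


def build_server(root: Path, host: str = "127.0.0.1", port: int = 8080):
    """Create (but do not start) a file server limited to `root`.

    `host` controls which network interface is exposed; the loopback
    default keeps the server unreachable from other machines.
    """
    handler = functools.partial(
        http.server.SimpleHTTPRequestHandler,
        directory=str(root),  # serve only this directory tree
    )
    return http.server.ThreadingHTTPServer((host, port), handler)


if __name__ == "__main__":
    # "./public" is a hypothetical directory holding the files to share.
    server = build_server(Path("./public"))
    try:
        server.serve_forever()
    except KeyboardInterrupt:
        server.server_close()
```

The two decisions that matter most are visible in the arguments: which directory is served and which address the socket binds to. Changing the host to `0.0.0.0` would expose the same files on every network interface.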

Common Security Misconfigurations in HTTP File Servers

Many security issues stem from inadequate configuration. One common problem is granting excessive permissions to the web server process. This allows the server to access more files than necessary, potentially exposing sensitive data or allowing attackers to modify system files. Another frequent oversight is the lack of robust authentication and authorization. Without proper authentication, anyone can access files, while weak authorization might allow users to access files beyond their privileges. Finally, failing to regularly update the server software and its dependencies leaves the server vulnerable to known exploits.

Potential Vulnerabilities Leading to Exploitation

Insecure configurations directly lead to several exploitable vulnerabilities. Directory traversal attacks, for example, allow attackers to access files outside the intended directory by manipulating the requested file path. This could reveal sensitive configuration files or other private data. Another common vulnerability is insecure file uploads, where attackers can upload malicious files (e.g., scripts) that execute on the server, granting them control. Denial-of-service (DoS) attacks are also possible, overloading the server with requests and rendering it unavailable. These attacks often target poorly configured servers lacking rate limiting or other protective mechanisms.

Examples of Insecure HTTP File Server Configurations

Consider a server configured to serve files from the root directory (“/”) without any authentication. This is incredibly insecure, because anyone who can reach the server can download every file the server process is able to read. Similarly, allowing file uploads without proper validation is risky: an attacker could upload a malicious PHP script and, if the server executes it, run arbitrary code. Failing to implement proper error handling can also leak sensitive information, such as file paths or internal server errors, which gives attackers useful detail about the system.
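One way to handle the upload problem is sketched below. It assumes an extension allow-list and a size limit picked purely for illustration, and it throws away the client-supplied file name in favor of a random one chosen by the server. The names `ALLOWED_EXTENSIONS`, `MAX_UPLOAD_BYTES`, and `safe_upload_name` are hypothetical.

```python
import secrets
from pathlib import PurePosixPath

ALLOWED_EXTENSIONS = {".png", ".jpg", ".jpeg", ".pdf", ".txt"}  # assumed policy
MAX_UPLOAD_BYTES = 5 * 1024 * 1024  # assumed 5 MiB limit


def safe_upload_name(client_filename: str, size_bytes: int) -> str:
    """Return a server-chosen storage name, or raise ValueError."""
    if size_bytes > MAX_UPLOAD_BYTES:
        raise ValueError("upload too large")
    # Keep only the final path component; treat backslashes as separators too.
    base = PurePosixPath(client_filename.replace("\\", "/")).name
    suffix = PurePosixPath(base).suffix.lower()
    if suffix not in ALLOWED_EXTENSIONS:
        raise ValueError(f"extension {suffix!r} is not allowed")
    # Discard the client-supplied name; keep only the vetted suffix.
    return secrets.token_hex(16) + suffix
```

An extension check alone does not prove what a file contains. It works best alongside storing uploads in a directory where the server never executes scripts.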

Secure vs. Insecure File Server Setups

| Feature | Insecure Setup | Secure Setup |
|---|---|---|
| Authentication | None or weak password protection | Strong password policies, multi-factor authentication |
| Authorization | No access control, everyone has full access | Fine-grained access control, restricting access to specific files and directories |
| Directory Listing | Enabled, revealing file structure | Disabled, so attackers cannot enumerate files and directories |
| File Uploads | No validation or sanitization | Strict validation and sanitization, preventing malicious file execution |

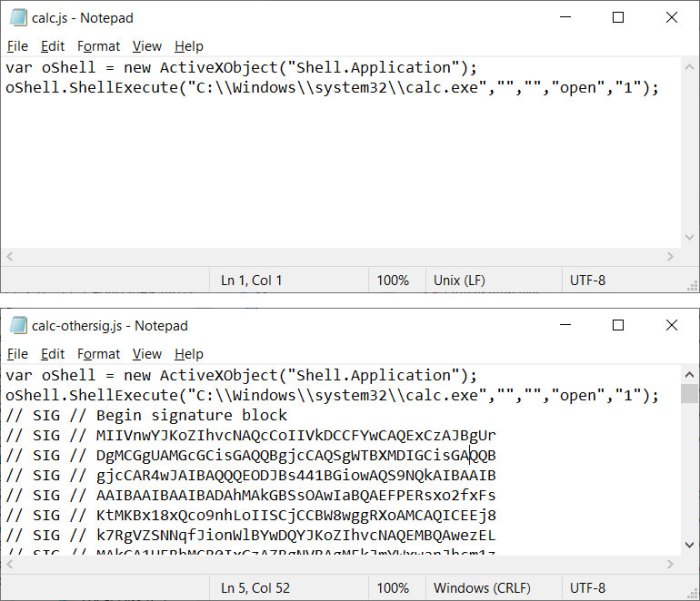

The Nature of “Proof of Concept” Exploits

Proof-of-concept (POC) exploits are like the blueprints of a digital heist. They don’t necessarily aim to cause widespread damage, but rather to demonstrate the *potential* for harm. They’re essentially a working example showcasing a vulnerability, proving that a specific attack is feasible. Think of them as a warning sign, highlighting weaknesses that need to be patched before malicious actors exploit them for real-world damage.

A POC exploit’s primary function is to validate the existence and exploitability of a security flaw. Security researchers often create these to alert developers and organizations about vulnerabilities, allowing them to address the issue before it’s weaponized by cybercriminals. However, the very act of creating and sharing POCs presents ethical complexities.

Ethical Implications of POC Exploits

The ethical landscape surrounding POC exploits is a delicate balancing act. While sharing POCs can raise awareness and ultimately improve security, irresponsible disclosure can have serious consequences. For example, releasing a POC before a patch is available could leave numerous systems vulnerable to immediate attack. Responsible disclosure typically involves privately informing the affected vendor and giving them sufficient time to fix the vulnerability before publicly releasing the POC. This ensures a more secure approach, minimizing the potential for widespread harm. The debate often centers on the responsibility of the researcher versus the potential for misuse by malicious actors.

Examples of POC Exploits Targeting HTTP File Servers

Several types of POC exploits can target the vulnerabilities of insecure HTTP file servers. One common example involves exploiting directory traversal flaws. A malicious actor might craft a specially designed request to access files outside the intended directory, potentially revealing sensitive configuration files, source code, or other confidential data. Another vulnerability is improper authentication or authorization, allowing unauthorized access to files if the server lacks robust security measures. A POC might demonstrate this by bypassing login mechanisms or exploiting weak password policies. Finally, certain HTTP file servers might be susceptible to buffer overflow attacks, where a specially crafted request could crash the server or even allow remote code execution. The POC would showcase how this overflow can be triggered and what the consequences might be.

Hypothetical Scenario: Successful POC Exploit

Imagine a small business using an outdated HTTP file server to store customer data. A security researcher discovers a directory traversal vulnerability. They create a POC exploit that, by manipulating the URL, allows access to a file containing customer credit card information. The POC successfully retrieves this data, demonstrating the severity of the vulnerability. This POC is then responsibly disclosed to the business, allowing them to update their server and implement better security practices, preventing potential financial and reputational damage. This scenario highlights how a POC can be a powerful tool for both identifying and mitigating security risks.

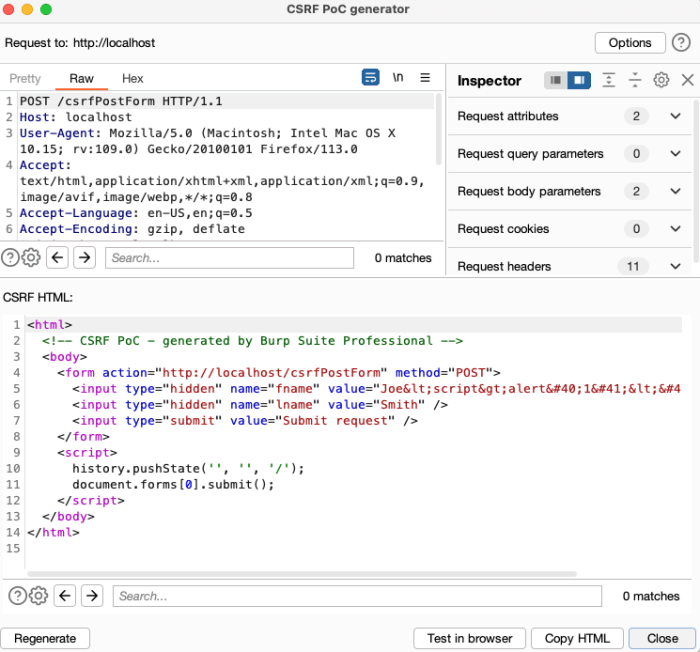

Exploitation Techniques and Vectors

Exploiting vulnerabilities in HTTP file servers often involves manipulating HTTP requests to gain unauthorized access to files or system resources. These attacks leverage weaknesses in server configuration, software flaws, or insecure coding practices. Understanding the common methods and vectors is crucial for both security professionals defending against attacks and ethical hackers conducting penetration testing.

Several techniques are employed to compromise HTTP file servers, ranging from simple directory traversal to sophisticated attacks leveraging buffer overflows or insecure authentication mechanisms. The effectiveness and complexity of these methods vary significantly, depending on the specific vulnerability and the attacker’s skill level.

Directory Traversal

Directory traversal attacks exploit flaws in how the server handles file paths. By manipulating the requested path, an attacker can reach files and directories outside the intended web root. For instance, an attacker might request `/../etc/passwd`, often with more `../` segments or URL-encoded variants such as `%2e%2e%2f`, to read the system’s user account file, assuming the server fails to handle the “..” sequences that move up a directory level. The technique is simple to execute, which makes it popular with attackers. Successful exploitation depends on the server lacking proper input sanitization and path validation.
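The path validation that defeats this attack can be sketched in a few lines. The idea is to resolve the requested path to its canonical form and then confirm the result still sits inside the web root. The function name `resolve_inside_root` is hypothetical, and the sketch assumes the request path has already been URL-decoded by the server.

```python
from pathlib import Path


def resolve_inside_root(root: Path, requested: str) -> Path:
    """Map a request path to a file under `root`, or raise PermissionError."""
    root = root.resolve()
    # lstrip("/") stops an absolute request path from replacing `root`.
    candidate = (root / requested.lstrip("/")).resolve()
    # After resolution, ".." segments and symlinks are gone, so a simple
    # ancestry check is enough.
    if candidate != root and root not in candidate.parents:
        raise PermissionError(f"path escapes web root: {requested!r}")
    return candidate
```

Checking the resolved path, rather than searching the raw string for “..”, means encoded or oddly nested variants are judged by where they actually lead.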

HTTP Request Smuggling

HTTP request smuggling exploits disagreements about where one request ends and the next begins. It typically arises when a front-end component (a reverse proxy or load balancer) and the back-end server parse the same byte stream differently, most often because a request carries conflicting `Content-Length` and `Transfer-Encoding` headers. The attacker hides a second request inside the body of the first; the back end then treats it as a separate request, which can bypass front-end access controls or interfere with other users’ requests. This technique requires a deeper understanding of HTTP than directory traversal and is harder to implement.
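On the defensive side, a server or proxy can refuse any request whose length is ambiguous. The sketch below assumes headers arrive as a list of name/value pairs; the helper name `has_ambiguous_framing` is hypothetical. It flags the combinations most often used for smuggling.

```python
def has_ambiguous_framing(headers: list[tuple[str, str]]) -> bool:
    """Return True if the request body length cannot be determined safely."""
    content_lengths = {
        value.strip() for name, value in headers if name.lower() == "content-length"
    }
    has_transfer_encoding = any(
        name.lower() == "transfer-encoding" for name, _ in headers
    )
    # Both framing mechanisms present: two parsers may pick different ones.
    if has_transfer_encoding and content_lengths:
        return True
    # Several Content-Length headers that disagree with each other.
    if len(content_lengths) > 1:
        return True
    # A Content-Length that is not a plain non-negative ASCII integer.
    return any(not (v.isascii() and v.isdigit()) for v in content_lengths)
```

A request that trips this check is a reasonable candidate for a `400 Bad Request` response followed by closing the connection, so no leftover bytes are interpreted as a second request.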

Parameter Tampering

Many HTTP file servers use parameters in URLs to specify files. Parameter tampering involves modifying these parameters to gain access to unintended files or functionality. For example, a URL like `/download.php?file=image.jpg` might be modified to `/download.php?file=../../etc/passwd`. Again, this relies on inadequate server-side input validation.
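A sturdier design avoids accepting file paths from the client at all. In the sketch below the `file` parameter carries an opaque identifier that the server looks up in its own catalogue. The `DOWNLOADS` mapping, its paths, and `lookup_download` are hypothetical names used only for illustration.

```python
from pathlib import Path

# Hypothetical catalogue: public identifier -> file the server chose to expose.
DOWNLOADS = {
    "logo": Path("/srv/files/public/image.jpg"),
    "manual": Path("/srv/files/public/manual.pdf"),
}


def lookup_download(file_id: str) -> Path:
    """Return the file for a known identifier; reject everything else."""
    try:
        return DOWNLOADS[file_id]
    except KeyError:
        # Anything not in the catalogue, including "../../etc/passwd",
        # is simply an unknown identifier.
        raise LookupError(f"unknown file id: {file_id!r}") from None
```

With this approach a tampered parameter has nothing to traverse, because the client never supplies any part of the file system path.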

Exploitation Steps

A typical exploitation process often follows these steps:

- Reconnaissance: Identifying the target server and potential vulnerabilities. This might involve using tools like Nmap to scan for open ports and services, and analyzing the server’s HTTP headers and responses for clues about its configuration and software version.

- Vulnerability Assessment: Determining specific vulnerabilities present in the server. This may involve manual testing or using automated vulnerability scanners.

- Exploit Development/Selection: Crafting or selecting an exploit that leverages the identified vulnerability. This may involve writing custom scripts or using publicly available exploit tools.

- Execution: Sending the crafted HTTP request to the server to trigger the vulnerability. This could involve using tools like curl or Burp Suite.

- Post-Exploitation: Accessing and potentially manipulating the compromised system. This might involve retrieving sensitive data, installing malware, or gaining further control.

Analyzing the Impact of Successful Exploits

A successful proof-of-concept (POC) exploit against an HTTP file server can have devastating consequences, ranging from minor inconvenience to a full-blown security crisis. The impact depends heavily on the server’s role within an organization’s infrastructure, the sensitivity of the stored data, and the attacker’s goals. Understanding these potential ramifications is crucial for effective security planning and incident response.

The consequences of a successful exploit extend far beyond the initial compromise. A compromised file server often serves as a springboard for further attacks, allowing attackers to move laterally within a network, exfiltrating sensitive data, installing malware, or disrupting operations. The cascading effect of a single vulnerability can be significant, resulting in substantial financial losses, reputational damage, and legal repercussions.

Data Breaches from Vulnerable File Servers

Vulnerable HTTP file servers are a prime target for malicious actors because of the sheer volume of sensitive data they often hold. Imagine a company’s file server, containing customer data such as credit card numbers and personal identifiers, that is compromised through a known, unpatched vulnerability. An attacker could download this information, leading to identity theft, financial fraud, and significant regulatory fines for the company. The breach could also damage the company’s reputation, causing a loss of customer trust and, in the worst case, business failure.

Further Attacks Following Initial Compromise

Once an attacker gains access to a file server, they can leverage that access to launch a range of additional attacks. This might involve installing malware to further compromise other systems on the network, creating backdoors for persistent access, or using the server as a launching point for distributed denial-of-service (DDoS) attacks against other targets. They might also modify or delete files, causing significant disruption to business operations. The compromised server becomes a foothold, allowing for deeper penetration and more extensive damage. For instance, an attacker might use the server to launch phishing attacks, sending malicious emails to employees from what appears to be a legitimate internal source.

Leveraging a Compromised File Server for Additional Attacks

A compromised file server can be a powerful tool for an attacker. They could upload malicious scripts or executables, allowing them to execute arbitrary code on the server and potentially gain control of other systems within the network. This could involve exploiting vulnerabilities in other applications or services running on the network. Additionally, the attacker could use the server to store stolen data, making it easier to exfiltrate information without raising suspicion. They could also use it as a command-and-control server to manage a botnet, launching attacks against other targets. The possibilities are vast and depend heavily on the attacker’s skills and resources. Consider a scenario where an attacker uses a compromised file server to scan the internal network for other vulnerabilities, then exploits those weaknesses to gain access to even more sensitive systems. The initial compromise acts as a catalyst for further attacks.

Mitigation Strategies and Best Practices

Securing your HTTP file server isn’t just about preventing embarrassing leaks; it’s about protecting sensitive data and maintaining your online reputation. A compromised file server can lead to significant financial losses, legal repercussions, and damage to your brand. Implementing robust security measures is crucial for preventing exploitation and maintaining the integrity of your systems. This section outlines key strategies and best practices to fortify your HTTP file server against common attacks.

Effective security relies on a multi-layered approach. It’s not enough to rely on a single solution; a combination of preventative measures, regular monitoring, and incident response planning is essential for a truly secure environment. Think of it like defending a castle: you need strong walls, a vigilant guard, and a rehearsed plan for the day the walls are breached.

Best Practices for Securing HTTP File Servers

Implementing these best practices will significantly reduce the risk of exploitation. They cover a range of security aspects, from basic configuration to advanced security measures.

- Restrict Access: Limit access to the file server using strong passwords and multi-factor authentication. Implement role-based access control (RBAC) to grant only necessary permissions to users and groups.

- Regular Updates and Patching: Keep the server’s operating system, web server software, and any related applications up-to-date with the latest security patches. Vulnerabilities are constantly being discovered, and timely patching is crucial.

- Firewall Configuration: Configure a firewall to restrict access to the file server from unauthorized networks and IP addresses. Only allow necessary ports and protocols.

- Input Validation: Validate and sanitize all user input, especially requested file paths and upload names, to prevent path traversal and injection attacks such as SQL injection or cross-site scripting (XSS).

- Regular Security Audits: Conduct regular security audits and penetration testing to identify and address vulnerabilities before they can be exploited.

- Secure File Permissions: Configure appropriate file permissions to restrict access to sensitive files and directories. Ensure that only authorized users and processes have read and write access.

- HTTPS Encryption: Always use HTTPS to encrypt communication between the client and the server. This protects data in transit from eavesdropping and tampering.
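The file permissions item in the list above lends itself to a quick automated check. This is a minimal sketch, assuming a POSIX system, that walks a directory tree and reports anything writable by every local user. The function name `find_world_writable` is hypothetical.

```python
import os
import stat
from pathlib import Path


def find_world_writable(root: Path) -> list[Path]:
    """List files and directories under `root` that any user may modify."""
    flagged = []
    for dirpath, dirnames, filenames in os.walk(root):
        for name in dirnames + filenames:
            path = Path(dirpath) / name
            mode = path.lstat().st_mode
            if stat.S_ISLNK(mode):
                continue  # permission bits on a symlink are not meaningful
            if mode & stat.S_IWOTH:  # the "other" write bit is set
                flagged.append(path)
    return sorted(flagged)
```

Running a check like this against the served directory on a schedule turns a one-off hardening step into something that catches permissions drifting over time.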

Specific Security Measures to Prevent Exploitation Techniques

Addressing specific vulnerabilities requires targeted security controls. The following measures address common exploitation vectors.

- Directory Traversal Prevention: Resolve every requested path to its canonical form (after URL decoding) and reject it unless the result still lies inside the intended directory. Filtering out “../” strings alone is easy to bypass with encoded variants.

- Denial of Service (DoS) Mitigation: Implement rate limiting and other techniques to prevent denial-of-service attacks that overwhelm the server and make it unavailable to legitimate users.

- Cross-Site Scripting (XSS) Prevention: Use appropriate encoding and output escaping techniques to prevent XSS attacks. This involves sanitizing user-supplied data before displaying it on the web page.

- SQL Injection Prevention: Use parameterized queries or stored procedures to prevent SQL injection attacks. Never directly embed user-supplied data into SQL queries.
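Rate limiting, mentioned in the DoS item above, can be sketched as a sliding window: remember the timestamps of each client’s recent requests and refuse new ones once the window is full. The class below is an in-memory illustration with hypothetical names. A production setup would more likely rely on the web server’s or a reverse proxy’s built-in limiter.

```python
import time
from collections import defaultdict, deque
from typing import Optional


class SlidingWindowLimiter:
    """Allow at most `max_requests` per client in any `window_seconds` span."""

    def __init__(self, max_requests: int, window_seconds: float):
        self.max_requests = max_requests
        self.window_seconds = window_seconds
        self._hits = defaultdict(deque)  # client id -> recent timestamps

    def allow(self, client_id: str, now: Optional[float] = None) -> bool:
        now = time.monotonic() if now is None else now
        hits = self._hits[client_id]
        # Drop timestamps that have aged out of the window.
        while hits and now - hits[0] >= self.window_seconds:
            hits.popleft()
        if len(hits) >= self.max_requests:
            return False
        hits.append(now)
        return True
```

For example, `SlidingWindowLimiter(100, 60.0)` would cap each client at 100 requests per minute, with the client’s IP address as one possible `client_id`.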

Security Plan for an HTTP File Server

A comprehensive security plan combines preventative measures, monitoring, and response procedures. This plan outlines key elements for a secure HTTP file server.

- Risk Assessment: Identify potential threats and vulnerabilities specific to the file server and the data it stores.

- Security Controls Implementation: Implement the best practices and security measures described above.

- Monitoring and Logging: Implement robust logging and monitoring to detect suspicious activity and potential security breaches.

- Incident Response Plan: Develop a detailed incident response plan to handle security incidents effectively and minimize damage.

- Regular Security Reviews: Conduct regular reviews of the security plan and controls to ensure their effectiveness and adapt to evolving threats.
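As a small illustration of the monitoring item in the plan above, the sketch below flags request targets that contain a “..” path segment, including URL-encoded and double-encoded forms. It is a heuristic under stated assumptions: the function names are hypothetical, and extracting the request target from your server’s log format is left out.

```python
import re
from urllib.parse import unquote

# ".." as a whole segment, after a path separator or a query delimiter.
_DOT_DOT_SEGMENT = re.compile(r"(?:^|[\\/=&?])\.\.(?:[\\/]|$)")


def looks_like_traversal(request_target: str) -> bool:
    """Heuristic: does the decoded request target contain a '..' segment?"""
    # Decode twice so double-encoded input (%252e%252e%252f) is also caught.
    decoded = unquote(unquote(request_target))
    return _DOT_DOT_SEGMENT.search(decoded) is not None


def flag_suspicious(request_targets):
    """Return the request targets that deserve a closer look."""
    return [t for t in request_targets if looks_like_traversal(t)]
```

A hit does not prove an attack succeeded, only that someone tried. Correlating flagged requests with the response status in the same log entry shows which attempts the server actually honored.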

Common Vulnerabilities and Mitigation Strategies

This table summarizes common vulnerabilities and their corresponding mitigation strategies.

| Vulnerability | Description | Mitigation Strategy | Example |
|---|---|---|---|
| Directory Traversal | Attackers access files outside intended directory. | Input validation, path canonicalization | Validate user-supplied file paths to prevent “../” sequences. |
| Cross-Site Scripting (XSS) | Malicious scripts injected into web pages. | Output encoding, input sanitization | Encode user inputs before displaying them on web pages. |
| SQL Injection | Malicious SQL code injected into database queries. | Parameterized queries, stored procedures | Use prepared statements instead of directly embedding user inputs into SQL queries. |
| Denial of Service (DoS) | Server overwhelmed by malicious traffic. | Rate limiting, load balancing | Implement mechanisms to limit requests from a single IP address or source. |
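Two rows of the table, SQL injection and XSS, translate directly into code. The sketch below assumes a hypothetical SQLite table named `files` with `name` and `owner` columns. It uses a parameterized query to read from it and `html.escape` to encode file names before they reach a page.

```python
import html
import sqlite3


def find_files_by_owner(conn: sqlite3.Connection, owner: str) -> list[str]:
    """Look up file names with a parameterized query."""
    # The "?" placeholder sends `owner` as data, never as SQL text.
    rows = conn.execute(
        "SELECT name FROM files WHERE owner = ? ORDER BY name", (owner,)
    )
    return [name for (name,) in rows]


def render_listing(names: list[str]) -> str:
    """Build an HTML list, encoding each name so markup in it stays inert."""
    items = "".join(f"<li>{html.escape(name)}</li>" for name in names)
    return f"<ul>{items}</ul>"
```

With the placeholder in place, an input such as `alice' OR '1'='1` is compared literally against the `owner` column and matches nothing, instead of rewriting the query.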

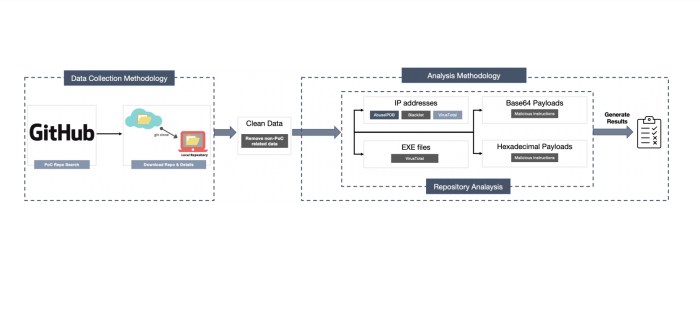

Case Studies of Real-World Exploits

Understanding the theoretical vulnerabilities of HTTP file servers is crucial, but real-world examples paint a clearer picture of the potential damage and the attacker’s methods. Analyzing past incidents allows us to learn from mistakes and implement better security practices. This case study details a significant breach, illustrating the consequences of neglecting basic security measures.

A large organization, managing a substantial amount of sensitive data, relied on an internal HTTP file server for document sharing. This server, while seemingly innocuous, lacked fundamental security configurations. The organization failed to implement robust authentication and authorization mechanisms, leaving the server accessible to anyone who knew its IP address and directory structure. This oversight proved catastrophic.

Vulnerability Exploited: Lack of Authentication and Authorization

The primary vulnerability was the complete absence of authentication and authorization. Anyone could access the server anonymously, download files, and potentially even upload malicious content. This lack of basic security controls allowed attackers to easily exploit the server. The server’s directory listing feature further exacerbated the problem, providing an attacker with a clear view of the available files and their structure, making targeting specific sensitive information straightforward.
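For contrast, even a basic authentication gate would have forced the attacker to present credentials. The sketch below checks an HTTP Basic `Authorization` header against salted password hashes using a constant-time comparison. The function names, the iteration count, and the shape of the `users` mapping are assumptions for illustration. Basic authentication is only reasonable over HTTPS, since the credentials are merely base64-encoded.

```python
import base64
import binascii
import hashlib
import hmac


def parse_basic_auth(header_value: str):
    """Return (user, password) from a Basic Authorization header, or None."""
    scheme, _, encoded = header_value.partition(" ")
    if scheme.lower() != "basic":
        return None
    try:
        decoded = base64.b64decode(encoded.strip(), validate=True).decode("utf-8")
    except (binascii.Error, UnicodeDecodeError):
        return None
    user, sep, password = decoded.partition(":")
    return (user, password) if sep else None


def hash_password(password: str, salt: bytes) -> bytes:
    # Iteration count is an assumed value; tune it for your hardware.
    return hashlib.pbkdf2_hmac("sha256", password.encode("utf-8"), salt, 100_000)


def is_authorized(header_value: str, users: dict[str, tuple[bytes, bytes]]) -> bool:
    """`users` maps a user name to its (salt, password_hash) pair."""
    creds = parse_basic_auth(header_value or "")
    if creds is None:
        return False
    user, password = creds
    # Unknown users still go through hashing so timing reveals less.
    salt, expected = users.get(user, (b"\x00" * 16, b""))
    return hmac.compare_digest(hash_password(password, salt), expected)
```

A request that fails this check would normally receive a `401 Unauthorized` response before any file system access takes place.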

Attacker’s Actions

The attacker, likely through reconnaissance techniques, discovered the existence of the vulnerable HTTP file server. They then directly accessed the server using its IP address. The absence of any authentication meant no login credentials were required. The attacker proceeded to browse the directory structure, identifying sensitive documents such as financial reports, customer databases, and internal communications. These documents were freely downloadable, allowing the attacker to exfiltrate a significant amount of data. The attacker could also have uploaded malicious files to further compromise the organization’s network, but no such activity was documented in this incident.

Impact and Mitigation

The data breach resulted in significant financial losses for the organization, reputational damage, and legal ramifications. The compromised data included sensitive personal information, requiring the organization to comply with data breach notification laws and face potential legal action from affected individuals. To mitigate the damage, the organization immediately took the server offline, conducted a thorough security audit, implemented strong authentication and authorization mechanisms, and engaged forensic experts to investigate the extent of the breach. They also implemented stricter access control lists and regular security updates, along with employee training on security best practices. The incident served as a stark reminder of the importance of basic security configurations and the devastating consequences of neglecting them.

Closure

So, there you have it: a comprehensive look into the dark art of exploiting HTTP file servers. While the potential for damage is significant, the good news is that many of these vulnerabilities are preventable. By understanding the attack vectors, implementing robust security measures, and staying vigilant, you can significantly reduce your risk. Remember, proactive security is the best defense against these sophisticated threats. Don’t wait for an attack – prepare for it.