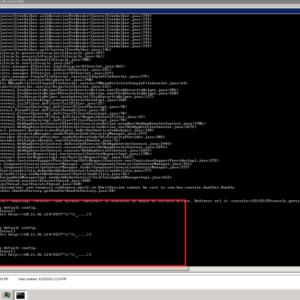

Multiple flaws in Splunk Enterprise? Yeah, we’ve dug deep into the issues plaguing this popular SIEM. From crippling security vulnerabilities in pre-8.x versions to performance bottlenecks caused by improper configuration, we’re exposing the cracks in a seemingly impenetrable fortress. Think insufficient access controls, slow search queries, and scalability nightmares: we’re pulling back the curtain on it all.

This isn’t just another tech complaint; it’s a survival guide for anyone relying on Splunk Enterprise. We’ll break down common problems, offer practical solutions, and even provide some preventative measures. Get ready to optimize your Splunk setup and avoid the headaches (and potential security breaches) that come with ignoring these critical flaws.

Commonly Reported Flaws in Splunk Enterprise


Splunk Enterprise, while a powerful security information and event management (SIEM) tool, isn’t immune to vulnerabilities. Like any complex software, it has seen its share of security flaws and configuration issues that, if left unaddressed, can significantly impact its effectiveness and security posture. Let’s dive into some of the commonly reported problems in versions prior to 8.x and explore mitigation strategies.

Prevalent Security Vulnerabilities in Splunk Enterprise (Pre-8.x)

Several security vulnerabilities plagued Splunk Enterprise versions before the 8.x release. These vulnerabilities, if exploited, could have allowed unauthorized access to sensitive data or even system compromise. Understanding these flaws is crucial for maintaining a secure Splunk environment.

| Vulnerability Type | Impact | Mitigation Strategies |
|---|---|---|
| Cross-Site Scripting (XSS) | Allows attackers to inject malicious scripts into Splunk web interfaces, potentially stealing user credentials or performing other malicious actions. Imagine an attacker injecting a script that redirects users to a phishing site disguised as the legitimate Splunk login page. | Regularly update Splunk to the latest version. Implement robust input validation and output encoding to sanitize user inputs. Utilize a web application firewall (WAF) to filter malicious traffic. |
| SQL Injection | Attackers could exploit vulnerabilities in Splunk’s database interactions to execute arbitrary SQL commands, potentially gaining unauthorized access to data or even compromising the entire database. A successful attack could lead to data exfiltration or database corruption. | Use parameterized queries to prevent SQL injection attacks. Regularly patch Splunk to address known vulnerabilities. Employ database-level security measures, such as access control lists (ACLs) and auditing. |
| Unauthenticated Access to APIs | Some APIs in older Splunk versions might have lacked proper authentication, allowing attackers to access sensitive data or perform administrative actions without needing valid credentials. This could lead to unauthorized data access, configuration changes, or even complete system takeover. | Ensure all APIs are properly secured with authentication and authorization mechanisms. Regularly review API access permissions and restrict access to only authorized users and applications. Implement robust logging and monitoring of API activity. |
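To make two of these mitigations concrete, here is a minimal Python sketch of parameterized queries and output encoding, using only the standard `sqlite3` and `html` modules. It is illustrative, not Splunk code: the `users` table and both helper functions are hypothetical, and for Splunk itself the primary mitigation remains applying vendor patches.

```python
import html
import sqlite3

# Hypothetical in-memory table standing in for any database a component queries.
conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE users (name TEXT, role TEXT)")
conn.executemany("INSERT INTO users VALUES (?, ?)", [("alice", "admin"), ("bob", "analyst")])


def find_role(name: str) -> list[str]:
    # Parameterized query: `name` is passed as data, never spliced into the SQL,
    # so a payload like "x' OR '1'='1" cannot change the query's logic.
    rows = conn.execute("SELECT role FROM users WHERE name = ?", (name,))
    return [role for (role,) in rows]


def render_comment(comment: str) -> str:
    # Output encoding: escape HTML metacharacters before placing
    # user-supplied text in a page, so injected <script> tags render inert.
    return f"<p>{html.escape(comment)}</p>"


print(find_role("bob"))           # ['analyst']
print(find_role("x' OR '1'='1"))  # [] (the payload matches no user)
print(render_comment("<script>alert(1)</script>"))
```

The same principle applies whatever the database: keep untrusted input out of the query text and out of raw HTML.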

Impact of Insufficient Access Control

Insufficient access control in Splunk Enterprise is a major concern. Without proper controls, unauthorized users could access sensitive data, modify configurations, or even disrupt Splunk operations. For instance, a junior analyst with excessive privileges could inadvertently delete critical logs or alter system settings, leading to significant operational disruptions or security breaches. This highlights the importance of implementing the principle of least privilege – granting users only the necessary access rights for their roles.
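As a sketch of least privilege, the snippet below models roles as sets of capabilities and denies anything not explicitly granted. Splunk applies the same idea through roles and capabilities (for example, the `delete` search command requires the `delete_by_keyword` capability, which by default only the `can_delete` role holds), but every role and capability name in this code is hypothetical.

```python
# Hypothetical roles and capabilities; illustrative names, not a real authorize.conf.
ROLE_CAPABILITIES: dict[str, set[str]] = {
    "junior_analyst": {"search"},
    "senior_analyst": {"search", "schedule_search"},
    "platform_admin": {"search", "schedule_search", "edit_config"},
    "data_steward": {"search", "delete_events"},
}


def is_allowed(role: str, capability: str) -> bool:
    """Deny by default: unknown roles and ungranted capabilities are refused."""
    return capability in ROLE_CAPABILITIES.get(role, set())


def excess_capabilities(role: str, needed: set[str]) -> set[str]:
    """Capabilities a role holds beyond what the job needs (candidates to revoke)."""
    return ROLE_CAPABILITIES.get(role, set()) - needed


print(is_allowed("junior_analyst", "delete_events"))  # False
print(excess_capabilities("platform_admin", {"search", "edit_config"}))  # {'schedule_search'}
```

Keeping destructive capabilities in a separate, rarely assigned role is what prevents the "junior analyst deletes critical logs" scenario.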

Impact of Improper Configuration on Performance

Improper configuration of Splunk Enterprise can lead to significant performance bottlenecks, which show up as slow searches, high resource utilization, and even system crashes. For example, poorly planned indexing settings, such as overly broad index configurations or unmanaged data volume, can degrade performance significantly. Similarly, inadequate resource allocation can overwhelm the system, resulting in slow responses and potential instability.

Performance Issues and Optimization Strategies

Splunk Enterprise, while a powerful tool for log analysis and security monitoring, can become sluggish under heavy loads. Understanding and addressing performance bottlenecks is crucial for maintaining a responsive and efficient system. This section dives into common performance issues and provides practical strategies for optimization. We’ll explore indexing, configuration for large data, slow search queries, and common causes of degradation, offering actionable steps to improve your Splunk experience.

Optimizing Splunk Enterprise Indexing Performance

Efficient indexing is the cornerstone of a performant Splunk deployment. Slow indexing directly impacts search speed and overall system responsiveness. A step-by-step approach to optimization involves carefully considering data volume, indexing strategy, and resource allocation.

- Assess Data Volume and Velocity: Before any optimization, understand the volume and rate of data ingestion. High-volume, high-velocity data streams require a more robust indexing strategy. Splunk’s own tools, such as the Monitoring Console, can help visualize this data inflow.

- Optimize Data Input: Ensure your data inputs are configured correctly. Using efficient input methods, such as TCP, UDP, or the Splunk HTTP Event Collector (HEC), instead of less efficient methods reduces overhead. Configuring source types properly (for example, specifying timestamp formats accurately) is also critical.

- Index Configuration: Choose appropriate indexing settings. Consider factors like the number of indexes, index replication, and hot-warm-cold storage tiers. Overly large indexes can hinder performance. Smaller, more focused indexes often perform better. Employing a tiered approach allows for efficient management of data based on its age and importance.

- Resource Allocation: Allocate sufficient resources (CPU, memory, disk I/O) to your indexers. Monitor resource utilization closely. Insufficient resources can lead to significant indexing slowdowns. Consider using dedicated hardware for indexing, especially with high data volumes.

- Data Transformation and Filtering: Use props.conf and transforms.conf to filter out unnecessary data *before* indexing. Reducing the volume of data indexed significantly improves performance. This is often more efficient than filtering during search.
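Inside Splunk, filtering before indexing is usually done by pointing a `TRANSFORMS-` setting in props.conf at a transforms.conf stanza that sets `DEST_KEY = queue` and `FORMAT = nullQueue`, so matching events are discarded. The Python sketch below only simulates that routing decision to show its effect on indexed volume; the `DISCARD` pattern and the sample events are hypothetical.

```python
import re

# Hypothetical rule: drop DEBUG-level noise, as a nullQueue transform would.
DISCARD = re.compile(r"\blevel=DEBUG\b")


def route(events: list[str]) -> tuple[list[str], int]:
    """Return the events to index and the number of bytes discarded."""
    kept, dropped_bytes = [], 0
    for event in events:
        if DISCARD.search(event):
            dropped_bytes += len(event.encode("utf-8"))
        else:
            kept.append(event)
    return kept, dropped_bytes


sample = [
    "2024-05-01T10:00:00Z level=INFO msg=login user=alice",
    "2024-05-01T10:00:01Z level=DEBUG msg=cache_probe key=42",
    "2024-05-01T10:00:02Z level=ERROR msg=db_timeout",
]
kept, dropped = route(sample)
print(len(kept), dropped)
```

Measuring the dropped bytes over a representative sample is a quick way to estimate how much license and disk a filter would save before deploying it.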

Configuring Splunk Enterprise for Large Data Volumes

Handling massive data volumes efficiently requires careful planning and configuration. Strategies focus on optimizing resource allocation, data retention policies, and utilizing Splunk’s features for scalable data management.

- Distributed Indexing: For extremely large datasets, consider distributed indexing. This spreads the indexing workload across multiple indexers (optionally organized into an indexer cluster), significantly improving throughput and scalability.

- Data Retention Policies: Implement a robust data retention policy to manage disk space. Archive or delete old data that is no longer needed. This prevents indexes from growing excessively large, maintaining search performance.

- Index Replication: Replicate indexes to provide redundancy and high availability. While increasing storage requirements, replication ensures data accessibility even in case of hardware failure, maintaining business continuity.

- Data Summarization: Use summary indexing, report acceleration, or data model acceleration (queried with the `tstats` command) so that searches process precomputed summaries instead of raw events, which accelerates query execution. This is particularly useful for long-term trend analysis.
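The principle behind summary indexing can be sketched in a few lines of Python: raw events are collapsed into compact per-hour counts that a trend search can read instead of the raw data. This is an illustration under assumed data (the events and host names are hypothetical), not Splunk’s implementation; in Splunk, a scheduled search would typically write such rows to a summary index with the `collect` command.

```python
from collections import Counter
from datetime import datetime

# Hypothetical raw events: (ISO timestamp, host).
raw_events = [
    ("2024-05-01T10:05:00", "web01"),
    ("2024-05-01T10:40:00", "web01"),
    ("2024-05-01T10:55:00", "web02"),
    ("2024-05-01T11:10:00", "web01"),
]


def hourly_summary(events: list[tuple[str, str]]) -> dict[tuple[str, str], int]:
    """Collapse raw events into (hour, host) -> count rows, the kind of
    compact record a scheduled summary search would store."""
    counts: Counter = Counter()
    for ts, host in events:
        hour = datetime.fromisoformat(ts).replace(minute=0, second=0, microsecond=0)
        counts[(hour.isoformat(), host)] += 1
    return dict(counts)


summary = hourly_summary(raw_events)
for (hour, host), n in sorted(summary.items()):
    print(hour, host, n)
```

Four raw events become three summary rows here; over months of data the reduction is what makes long-range trend searches fast.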

Causes and Solutions for Slow Search Queries

Slow search queries can severely impact the usability of Splunk. Identifying the root causes and implementing appropriate solutions is essential.

- Inefficient Search Queries: Poorly written search queries are a common culprit. Avoid excessive use of wildcard characters (*), especially at the start of a term, and use efficient search operators and commands to refine your queries.

- Overly Broad Searches: Searching across vast time ranges or many indexes without proper filtering can lead to significant slowdowns. Narrowing the search scope is often the simplest solution.

- Lack of Indexing Optimization: As mentioned previously, inefficient indexing directly impacts search performance. Addressing the indexing issues outlined above is crucial.

- Resource Constraints: Insufficient resources (CPU, memory) on the search head can lead to slow query execution. Monitoring resource utilization and upgrading hardware if necessary is essential.

- Data Volume and Complexity: The sheer volume and complexity of data indexed can affect search speed. Efficient data modeling, filtering, and summarization can help mitigate this.
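One way to enforce narrow scoping is to generate searches from a helper that always names the index, sourcetype, and time range. The sketch below is a hypothetical Python helper that builds such an SPL string (using the standard `index=`, `sourcetype=`, `earliest=`, and `latest=` search terms) and rejects terms that begin with a wildcard; the index name `web` is an assumption.

```python
def scoped_search(index: str, sourcetype: str, earliest: str, terms: list[str]) -> str:
    """Build an SPL search that names its index, sourcetype and time range
    explicitly and refuses search terms that begin with a wildcard."""
    for term in terms:
        if term.startswith("*"):
            raise ValueError(f"leading wildcard in {term!r}: narrow the term instead")
    scope = [f"index={index}", f"sourcetype={sourcetype}", f"earliest={earliest}", "latest=now"]
    return " ".join(scope + terms)


# Hypothetical index and sourcetype names.
spl = scoped_search("web", "access_combined", "-24h", ["status=500"]) + " | stats count by host"
print(spl)
```

Putting the filters before the first pipe lets the indexers discard non-matching events early instead of shipping them to the search head.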

Common Causes of Splunk Enterprise Performance Degradation

Several factors contribute to Splunk Enterprise performance degradation. Proactive monitoring and addressing these issues can prevent major performance problems.

- Insufficient Hardware Resources: Lack of sufficient CPU, memory, and disk I/O on indexers and search heads.

- Inefficient Data Input Configuration: Using inefficient data inputs or improperly configured data sources.

- Poorly Designed Indexes: Overly large indexes, lack of index replication, or inadequate hot-warm-cold storage tiers.

- Unoptimized Search Queries: Inefficient search queries that consume excessive resources.

- Excessive Data Volume: Ingesting more data than the system can efficiently handle.

- Lack of Regular Maintenance: Failing to perform regular maintenance tasks such as log rotation, index cleanup, and system updates.

Scalability Challenges and Solutions

Splunk Enterprise, while a powerful log management and security information and event management (SIEM) platform, faces scalability hurdles as data volumes explode. Understanding these limitations and implementing appropriate architectural strategies is crucial for maintaining performance and avoiding system overload. Ignoring these challenges can lead to significant performance degradation, impacting real-time monitoring and analysis capabilities.

Splunk’s inherent architecture, while robust, has limitations when dealing with massive data ingestion rates and storage requirements. The indexed data model, while effective for smaller deployments, can become a bottleneck as data volume grows exponentially. This can manifest as slow search performance, increased latency, and ultimately, system instability. Furthermore, the single-instance architecture of a basic Splunk deployment is not designed for high availability or the processing of truly massive datasets.

Limitations of Splunk Enterprise Scaling

The primary scalability challenge in a basic Splunk Enterprise deployment stems from its reliance on a single, centralized indexer. As the volume of ingested data increases, that indexer becomes overloaded, creating a performance bottleneck as well as a single point of failure. Resource contention for both CPU and memory becomes a significant issue, slowing searches and potentially making the system unresponsive. Furthermore, the storage capacity required to accommodate exponentially growing data can become prohibitively expensive and complex to manage. Consider a company whose log data doubles every year: a single-indexer architecture would quickly become unsustainable, requiring constant upgrades and potentially leading to data loss or analysis delays.
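The doubling scenario is easy to quantify. The sketch below assumes a starting volume of 100 GB/day and a per-indexer capacity of 300 GB/day; both figures are hypothetical placeholders, since real per-indexer throughput depends heavily on hardware and search load.

```python
import math
from collections.abc import Iterator


def capacity_plan(start_gb_per_day: float, per_indexer_gb_per_day: float,
                  years: int) -> Iterator[tuple[int, float, int]]:
    """Yield (year, daily volume in GB, indexers needed), assuming volume doubles yearly."""
    for year in range(years + 1):
        volume = start_gb_per_day * 2 ** year
        yield year, volume, math.ceil(volume / per_indexer_gb_per_day)


# Hypothetical inputs: 100 GB/day today, 300 GB/day per indexer.
for year, volume, indexers in capacity_plan(100, 300, 4):
    print(f"year {year}: {volume:.0f} GB/day -> {indexers} indexer(s)")
```

Under those assumptions, one indexer suffices for the first two years, but by year four the same growth calls for six.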

Architectural Approaches for Scaling Splunk Enterprise

Several architectural approaches address Splunk’s scalability challenges. A common strategy involves implementing a distributed environment using multiple indexers and search heads. This distributes the workload, improving performance and resilience. Another approach is leveraging Splunk Cloud, a managed service that handles infrastructure scaling and maintenance. Finally, data volume reduction (through filtering and summarization) and optimized indexing configurations are crucial for managing the overall data footprint.

High-Availability Architecture for Splunk Enterprise

A high-availability architecture for Splunk Enterprise is essential for minimizing downtime and ensuring continuous operation. This typically involves deploying redundant indexers, search heads, and deployment servers. Load balancing across these components distributes the workload and prevents single points of failure. Furthermore, implementing a robust backup and recovery strategy is crucial to mitigate the impact of unexpected failures. This could involve regular backups of the indexer data, as well as failover mechanisms that automatically switch to a redundant system in case of an outage. A geographically distributed architecture can also enhance resilience against regional outages.

Utilizing Splunk’s Distributed Environment Features

Splunk’s distributed environment capabilities are key to achieving scalability. By deploying multiple indexers, each responsible for a subset of the overall data volume, the workload is effectively shared. This parallel processing significantly improves search performance and reduces latency. Similarly, distributing search heads allows for parallel processing of searches, further enhancing performance and enabling users to access results quickly, even with massive datasets. Effective configuration of indexer clustering and data replication is crucial for optimizing this distributed environment, ensuring data consistency and availability. Careful consideration of data partitioning strategies is also essential to ensure efficient data distribution across the indexers.
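A simplified model of spreading data across indexers with replication is sketched below: each event is assigned round-robin to a primary indexer, and copies go to the next peers in the list until the replication factor is met. This is an assumption-laden illustration; Splunk’s actual forwarder load balancing and the cluster manager’s replica placement work differently, and the indexer names are hypothetical.

```python
from itertools import cycle

INDEXERS = ["idx1", "idx2", "idx3"]  # hypothetical peer names


def assign(events: list[str], indexers: list[str],
           replication_factor: int = 2) -> dict[str, list[str]]:
    """Round-robin each event to a primary indexer and place replica copies
    on the next (replication_factor - 1) distinct indexers."""
    if not 1 <= replication_factor <= len(indexers):
        raise ValueError("replication factor must be between 1 and the number of indexers")
    placement = {}
    primaries = cycle(range(len(indexers)))
    for event in events:
        i = next(primaries)
        placement[event] = [indexers[(i + k) % len(indexers)] for k in range(replication_factor)]
    return placement


for event, peers in assign(["e1", "e2", "e3", "e4"], INDEXERS).items():
    print(event, peers)
```

Each indexer ends up holding a share of primaries plus copies of its neighbours’ data, which is why losing one peer does not lose any event.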

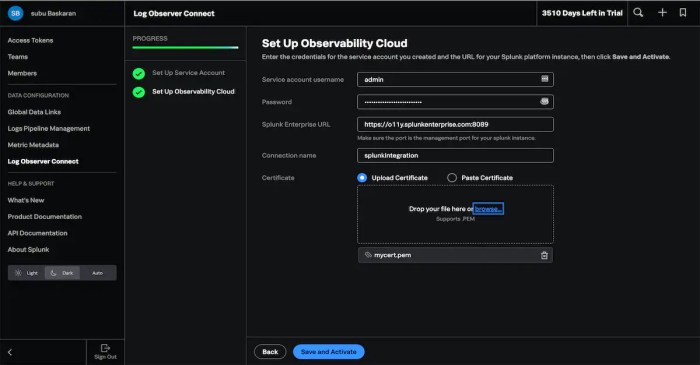

Integration and Compatibility Problems


Splunk Enterprise, while powerful, isn’t an island. Its effectiveness hinges on seamless integration with your existing security infrastructure. However, connecting Splunk to other tools and diverse operating systems can present significant hurdles, impacting data ingestion, analysis, and overall security posture. Let’s dive into the common pitfalls and strategies for smoother integration.

Successfully integrating Splunk Enterprise with other security tools and diverse operating systems requires careful planning and execution. Understanding potential challenges beforehand and having a robust troubleshooting methodology is crucial for a seamless deployment and efficient data management.

Potential Integration Challenges with Other Security Tools

Connecting Splunk with other security tools, such as other SIEMs, firewalls, and endpoint detection and response (EDR) systems, often involves configuring APIs, adapting data formats, and ensuring consistent data flow. Mismatched data formats, authentication issues, and network connectivity problems are frequent culprits. For example, integrating with a firewall might require translating proprietary log formats into a Splunk-compatible format, which can be time-consuming and error-prone if not handled carefully. Another common challenge is securing the connection between Splunk and external systems, which requires careful consideration of authentication protocols and access control lists. Insufficient bandwidth can also severely limit data transfer rates, leading to incomplete data and delayed analysis.
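To illustrate format translation, the sketch below parses one line of a hypothetical proprietary firewall log with a regular expression and wraps the fields in the JSON envelope accepted by the HTTP Event Collector’s `/services/collector/event` endpoint (`time`, `host`, `sourcetype`, `event`). The log format, the `vendor:firewall` sourcetype, and the UTC assumption are all illustrative; actually sending the body would also require an `Authorization: Splunk <token>` header.

```python
import json
import re
from datetime import datetime, timezone

# Hypothetical proprietary firewall format, for example:
# FW01 2024-05-01 10:00:00 DENY tcp 10.0.0.5:51515 -> 203.0.113.7:443
LINE = re.compile(
    r"(?P<device>\S+) (?P<date>\d{4}-\d{2}-\d{2} \d{2}:\d{2}:\d{2}) "
    r"(?P<action>ALLOW|DENY) (?P<proto>\w+) "
    r"(?P<src_ip>[\d.]+):(?P<src_port>\d+) -> (?P<dest_ip>[\d.]+):(?P<dest_port>\d+)"
)


def to_hec_event(line: str, sourcetype: str = "vendor:firewall") -> str:
    """Translate one firewall line into a JSON body for HEC."""
    match = LINE.fullmatch(line.strip())
    if match is None:
        # Surface unparseable lines instead of silently indexing garbage.
        raise ValueError(f"unrecognized firewall line: {line!r}")
    fields = match.groupdict()
    # Assumption: the device logs in UTC; adjust if it uses local time.
    ts = datetime.strptime(fields.pop("date"), "%Y-%m-%d %H:%M:%S").replace(tzinfo=timezone.utc)
    return json.dumps({
        "time": ts.timestamp(),
        "host": fields.pop("device"),
        "sourcetype": sourcetype,
        "event": fields,
    })


print(to_hec_event("FW01 2024-05-01 10:00:00 DENY tcp 10.0.0.5:51515 -> 203.0.113.7:443"))
```

Failing loudly on unrecognized lines makes format drift after a firewall firmware update visible instead of producing half-parsed events.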

Troubleshooting Connectivity Issues with External Data Sources

Troubleshooting connectivity issues starts with verifying network connectivity between Splunk and the external data source: check firewall rules, confirm that the remote IP address is reachable, and make sure the required ports are open. Next, examine the Splunk configuration files, focusing on the inputs and outputs related to the external data source. Common errors include incorrect hostnames, port numbers, or authentication credentials. Splunk’s built-in tools help here: `splunk btool inputs list --debug` shows the effective input configuration and which file each setting comes from, and searching the `_internal` index surfaces Splunk’s own error messages. Analyzing logs from both Splunk and the external data source is also crucial for pinpointing the root cause. Consider testing the connection with a simple tool like `telnet` or `nc` to confirm basic network reachability before delving into more complex Splunk configurations.
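The `telnet`/`nc` reachability check can also be scripted. This minimal Python sketch attempts a TCP connection with a timeout and reports success or failure; it only proves that the port accepts connections, not that Splunk is configured correctly behind it.

```python
import socket


def port_reachable(host: str, port: int, timeout: float = 3.0) -> bool:
    """Return True if a TCP connection to host:port succeeds (similar to `nc -z`)."""
    try:
        with socket.create_connection((host, port), timeout=timeout):
            return True
    except OSError:
        # Covers refused connections, timeouts and DNS resolution failures.
        return False
```

For example, `port_reachable("splunk-idx.example.internal", 9997)` (a hypothetical host) checks the port conventionally used for forwarder-to-indexer traffic; 8088 is the default HEC port and 8089 the default management port.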

Resolving Compatibility Issues Between Splunk Enterprise and Different Operating Systems

Splunk Enterprise supports a range of operating systems, but compatibility issues can still arise. These issues might stem from differences in libraries, system calls, or even file system permissions. For instance, deploying Splunk on a less common Linux distribution might require installing specific packages or adjusting configuration files to ensure proper functionality. Similarly, differences in user permissions between operating systems can impact Splunk’s ability to access data sources or write to log files. Thorough testing on the target operating system before full deployment is essential to avoid unexpected problems. Referencing Splunk’s official documentation for OS-specific requirements and best practices is vital for mitigating compatibility problems. Employing a consistent approach to user management across all connected systems can help to avoid permission-related issues.

Troubleshooting Integration Problems Flowchart

The troubleshooting process can be summarized as a flowchart with three steps:

- Step 1: Check network connectivity (ping, traceroute). If it fails, investigate network issues (firewall rules, DNS resolution); if it succeeds, proceed to Step 2.

- Step 2: Verify the Splunk configuration (inputs, outputs, authentication). If it is incorrect, correct the configuration errors; if it is correct, proceed to Step 3.

- Step 3: Examine the Splunk and external-system logs. If the root cause is identified, resolve the issue; otherwise, escalate to support.
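For teams that script their runbooks, the same decision flow can be encoded as a small function. This is a hypothetical sketch: `triage` and its boolean inputs stand in for the outcomes of the manual checks at each step.

```python
def triage(network_ok: bool, config_ok: bool, root_cause_found: bool) -> str:
    """Walk the three troubleshooting steps in order and return the next action."""
    if not network_ok:        # Step 1: ping / traceroute failed
        return "Investigate network issues (firewall rules, DNS resolution)"
    if not config_ok:         # Step 2: inputs, outputs or authentication are wrong
        return "Correct configuration errors"
    if root_cause_found:      # Step 3: the logs explain the failure
        return "Resolve issue"
    return "Escalate to support"


print(triage(network_ok=True, config_ok=True, root_cause_found=False))  # Escalate to support
```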

Data Integrity and Management Concerns

Splunk Enterprise, while a powerful tool for log management and security information and event management (SIEM), isn’t immune to data integrity issues. The sheer volume of data it handles, coupled with potential configuration errors and environmental factors, creates vulnerabilities that can compromise the accuracy and reliability of your insights. Understanding these risks and implementing proactive management strategies is crucial for maintaining a healthy and trustworthy Splunk environment.

Data corruption in Splunk can manifest in several ways, from subtle inaccuracies to complete data loss, and its root causes fall into a few recurring categories.

Potential Data Corruption Issues and Root Causes

Data corruption in Splunk can stem from various sources. Network issues during data ingestion, leading to incomplete or truncated events, are a frequent culprit. Incorrect indexing configurations, including faulty data transforms or improper event handling, can misclassify, misinterpret, or even drop data. Hardware failures, such as disk errors or storage array problems, can directly damage indexed data. Finally, human error, such as accidental deletion or manipulation, remains a significant risk factor. These issues can lead to inaccurate analysis, flawed security alerts, and ultimately, compromised decision-making.

Best Practices for Maintaining Data Integrity in Splunk Enterprise Environments

Maintaining data integrity requires a multi-faceted approach. Regularly reviewing and optimizing Splunk’s indexing pipeline is essential. This includes verifying data transforms, ensuring proper event parsing, and checking for any errors or warnings in the Splunk logs. Implementing robust network monitoring and redundancy can mitigate network-related data corruption. Data checksums and validation checks during ingestion can further enhance data integrity. Furthermore, a well-defined data governance policy, including clear data ownership and access controls, can minimize the risk of human error. Finally, rigorous testing of any Splunk configuration changes in a staging environment before deploying them to production can prevent unforeseen data integrity problems.
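Per-event checksums can be sketched with Python’s `hashlib`: record a SHA-256 digest at ingestion and recompute it later to spot altered or truncated events. This is a hypothetical illustration of the idea; Splunk’s own built-in option is the `enableDataIntegrityControl` setting in indexes.conf, which computes hashes over slices of a bucket’s raw data rather than per event.

```python
import hashlib


def fingerprint(events: list[str]) -> list[str]:
    """Record a SHA-256 digest per event at ingestion time."""
    return [hashlib.sha256(event.encode("utf-8")).hexdigest() for event in events]


def find_mismatches(events: list[str], recorded: list[str]) -> list[int]:
    """Return positions of events whose content no longer matches its recorded digest."""
    if len(events) != len(recorded):
        # A count mismatch means events were dropped or added after ingestion.
        raise ValueError(f"expected {len(recorded)} events, found {len(events)}")
    current = fingerprint(events)
    return [i for i, (now, then) in enumerate(zip(current, recorded)) if now != then]


ingested = ["user=alice action=login", "user=bob action=logout"]
digests = fingerprint(ingested)
stored = ["user=alice action=login", "user=bob action=logou"]  # simulated truncation
print(find_mismatches(stored, digests))  # [1]
```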

Strategies for Effective Data Backup and Recovery in Splunk Enterprise

Effective data backup and recovery are crucial for mitigating the impact of data corruption or loss. A comprehensive backup strategy should regularly back up every Splunk index, covering warm and cold buckets; hot buckets are still being written, so they are usually rolled to warm before they are backed up. Incremental backups between periodic full backups reduce storage needs and backup times. Offsite backups are highly recommended to protect against physical disasters. The recovery process should be tested regularly to ensure its effectiveness. Splunk’s built-in features, along with third-party backup solutions, can facilitate this process. A clear, well-documented recovery plan, including roles and responsibilities, is essential for a smooth and efficient recovery in the event of data loss.
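An incremental backup needs a way to decide what changed since the last run. The sketch below compares SHA-256 digests against the manifest saved by the previous backup; an empty manifest yields a full backup. It assumes the backup source is a directory of warm/cold bucket files (bucket names such as `db_1` are hypothetical) and, for brevity, ignores deleted files and reads each file fully into memory.

```python
import hashlib
from pathlib import Path


def manifest(root: Path) -> dict[str, str]:
    """Map every file under root (as a POSIX-style relative path) to its SHA-256 digest."""
    return {
        path.relative_to(root).as_posix(): hashlib.sha256(path.read_bytes()).hexdigest()
        for path in sorted(root.rglob("*"))
        if path.is_file()
    }


def files_to_back_up(root: Path, previous: dict[str, str]) -> list[str]:
    """Files that are new or changed since the manifest saved by the last backup."""
    current = manifest(root)
    return sorted(path for path, digest in current.items() if previous.get(path) != digest)
```

Because buckets largely stop changing once they leave the hot stage, each incremental run typically copies only newly rolled buckets.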

Managing Data Retention Policies within Splunk Enterprise

Data retention policies are critical for managing storage costs and meeting compliance requirements. Splunk allows fine-grained control over retention, so administrators can define retention periods per index based on each index’s importance and regulatory needs. Retention is enforced automatically: when the newest event in a bucket is older than the index’s `frozenTimePeriodInSecs` setting in indexes.conf, or the index exceeds its size limit (`maxTotalDataSizeMB`), buckets roll to frozen, which means they are deleted unless an archive destination such as `coldToFrozenDir` is configured. Regular review of these policies prevents excessive storage consumption, but careful planning is needed to avoid accidentally deleting crucial data. Understanding how retention affects reporting and analysis ensures that enough data is kept for ongoing investigations and compliance audits. A robust data lifecycle management strategy, encompassing archiving and deletion, is paramount.
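The retention arithmetic can be checked with a short sketch. It converts a hypothetical 90-day policy into a `frozenTimePeriodInSecs` value and flags buckets whose newest event falls outside that window; bucket names and dates are illustrative.

```python
from datetime import datetime, timedelta, timezone

RETENTION_DAYS = 90  # hypothetical policy for one index
FROZEN_TIME_PERIOD_IN_SECS = RETENTION_DAYS * 24 * 60 * 60  # value for indexes.conf


def buckets_to_freeze(newest_event_by_bucket: dict[str, datetime], now: datetime) -> list[str]:
    """A bucket becomes eligible for freezing once its newest event is older
    than the retention period."""
    cutoff = now - timedelta(seconds=FROZEN_TIME_PERIOD_IN_SECS)
    return sorted(name for name, newest in newest_event_by_bucket.items() if newest < cutoff)


now = datetime(2024, 6, 1, tzinfo=timezone.utc)
buckets = {  # hypothetical bucket names
    "db_a": now - timedelta(days=120),
    "db_b": now - timedelta(days=30),
}
print(FROZEN_TIME_PERIOD_IN_SECS, buckets_to_freeze(buckets, now))  # 7776000 ['db_a']
```

Because a bucket is judged by its newest event, individual events can be retained for longer than the nominal period; plan retention with that margin in mind.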

User Experience and Interface Issues

Splunk Enterprise, while a powerful tool for data analysis, often presents usability hurdles for users of varying technical expertise. The sheer volume of data, the complexity of search queries, and the sometimes overwhelming interface can lead to frustration and inefficiencies. Addressing these user experience challenges is crucial for maximizing the platform’s potential and ensuring user adoption.

Many users find the initial learning curve steep, particularly when navigating the multitude of features and options available. The customization options, while extensive, can be confusing for those unfamiliar with the platform’s architecture. Furthermore, poorly designed dashboards and visualizations can obscure insights instead of revealing them, hindering effective data analysis and decision-making.

Common Usability Challenges in Splunk Enterprise

Splunk’s interface, while powerful, can be overwhelming for new users. Common challenges include navigating the complex search language, understanding the various visualization options, and effectively managing large datasets. Poorly designed dashboards can lead to information overload, making it difficult to identify key trends and patterns. The lack of intuitive guidance and contextual help within the interface can also contribute to user frustration. For instance, a user might struggle to interpret complex visualizations without sufficient context or clear labeling. The system’s reliance on a command-line interface for some functions can also be a barrier for users accustomed to more graphical interfaces.

Strategies for Improving the Splunk Enterprise User Experience

Improving the user experience requires a multifaceted approach. This includes providing comprehensive training resources, creating intuitive dashboards and visualizations, and simplifying the search process. Clearer documentation, interactive tutorials, and online support communities can significantly reduce the learning curve. The implementation of user-friendly features, such as drag-and-drop functionality for dashboard creation and simplified search syntax options, would enhance accessibility. Furthermore, integrating contextual help and tooltips within the interface can guide users through complex tasks and prevent common errors. Regular user feedback should be actively sought and incorporated into the design and development process to ensure continuous improvement.

Best Practices for Creating Effective Dashboards and Visualizations

Effective dashboards and visualizations are essential for conveying complex data insights in a clear and concise manner. Key best practices include focusing on a limited number of key performance indicators (KPIs), using appropriate chart types for the data being presented, and ensuring clear labeling and annotation. Visual consistency across dashboards improves usability. Color palettes should be chosen carefully to avoid confusion and to highlight important trends. Interactive elements, such as drill-down capabilities, allow users to explore data in more detail. For example, a dashboard monitoring website traffic might use a line graph to show website visits over time, a bar chart to compare traffic from different sources, and a map to visualize the geographic distribution of visitors. Each visualization should be clearly labeled with relevant titles, axis labels, and legends.

- Prioritize key performance indicators (KPIs) and focus on presenting only essential information.

- Select appropriate chart types for the data being presented (e.g., line graphs for trends, bar charts for comparisons).

- Use clear and concise labels, titles, and legends to ensure data is easily understood.

- Maintain visual consistency across all dashboards to improve user experience.

- Employ a consistent and appropriate color palette to highlight key trends and patterns.

- Incorporate interactive elements, such as drill-down capabilities, to enable deeper data exploration.

- Regularly review and update dashboards to reflect changes in business needs and data sources.

Last Word


So, there you have it – the nitty-gritty on Splunk Enterprise’s hidden flaws. While it’s a powerful tool, understanding its weaknesses is crucial for maximizing its potential and minimizing its risks. From bolstering security to optimizing performance and scaling effectively, proactive management is key. Don’t let these issues slow you down; use this information to build a more robust and efficient Splunk environment.