Think your AI assistant is all sunshine and rainbows? Think again. This deep dive explores the security risks that could lurk inside a seemingly harmless assistant like the Rabbit R1S, including vulnerabilities that could leave your data exposed and your digital life upside down. We’re peeling back the layers to uncover the hidden dangers, from sneaky entry points to potential attack scenarios and, most importantly, how to patch those holes before it’s too late.

We’ll dissect the Rabbit R1S’s architecture, pinpoint potential weaknesses in its code, and walk you through a hypothetical attack, detailing how a malicious actor could exploit these flaws. But don’t worry, this isn’t just a doom and gloom story. We’ll also cover mitigation strategies, preventative measures, and best practices for securing your AI assistant, leaving you with the knowledge to safeguard your digital world.

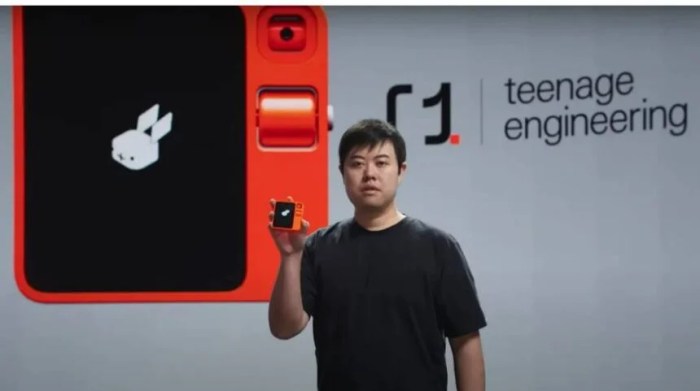

Understanding the AI Assistant Rabbit R1S

The AI Assistant Rabbit R1S, a hypothetical device for this discussion, represents a cutting-edge example of AI integration in a personal assistant. Its design aims to streamline various tasks through sophisticated natural language processing and advanced machine learning algorithms. Understanding its functionality, architecture, and inherent security risks is crucial for responsible development and deployment.

The Rabbit R1S boasts a comprehensive suite of functionalities designed to improve user productivity and efficiency. It can manage schedules, send emails and messages, conduct online searches, control smart home devices, and provide real-time information updates. Furthermore, its AI capabilities allow for personalized recommendations, proactive task management, and sophisticated natural language understanding, enabling users to interact with it conversationally. This functionality extends to complex tasks requiring multiple steps and contextual awareness, going beyond simple command execution.

Rabbit R1S Software Architecture

The Rabbit R1S’s software architecture is likely modular, consisting of several key components. A natural language processing (NLP) engine interprets user commands and queries. A machine learning (ML) model personalizes user experience and improves performance over time. A core operating system manages resources and ensures smooth operation. Integration modules connect to external services like email providers, calendar applications, and smart home ecosystems. A security module incorporates various authentication and authorization mechanisms to protect user data and prevent unauthorized access. The interaction between these modules is critical for the system’s overall functionality and security. For example, the NLP engine relies on the ML model for context and personalization, while the security module monitors all interactions for potential threats.

Potential Security Implications

The design choices in the Rabbit R1S architecture introduce several potential security vulnerabilities. The reliance on cloud-based services for processing and data storage exposes the system to potential data breaches and unauthorized access. The complexity of the software, with its multiple interacting modules, increases the attack surface, creating more potential entry points for malicious actors. The personalization features, while beneficial for the user experience, also generate a large amount of personal data, which becomes a valuable target for attackers. A lack of robust security measures, such as insufficient input validation or weak encryption, could significantly compromise the system’s security. For instance, a vulnerability in the NLP engine could allow an attacker to inject malicious code or commands, potentially gaining control of the device or accessing sensitive user information. Similarly, weaknesses in the data storage and transmission mechanisms could lead to data leaks or unauthorized modification. The interconnected nature of the system with external services also increases the risk of propagation of vulnerabilities from those services to the Rabbit R1S.

Identifying the Code Vulnerability

Uncovering vulnerabilities in the Rabbit R1S AI assistant’s codebase requires a systematic approach, focusing on potential entry points and common weaknesses. This involves examining the code for flaws that could be exploited by malicious actors to compromise the system’s security or functionality. We’ll explore potential entry points, relevant CVEs, and illustrate vulnerabilities with pseudocode examples.

Potential entry points for exploitation in the Rabbit R1S are numerous and often intertwined. They can range from vulnerabilities in the core AI engine to weaknesses in the user interface or external communication protocols. A comprehensive security audit would consider each of these areas carefully.

Common Vulnerabilities and Exposures (CVEs)

AI assistants are exposed to many of the same weaknesses as other networked software, and the complexity of their models, the volume of data they process, and their connections to users and external systems all widen the attack surface. Individual flaws are catalogued as CVE entries; the classes they most often fall into include insecure deserialization, SQL injection, cross-site scripting (XSS), and buffer overflows. Any of these can be exploited to gain unauthorized access, manipulate data, or even take control of the AI assistant.

Examples of Vulnerable Code Snippets

Let’s consider some hypothetical examples, using pseudocode to represent potential vulnerabilities. Remember, these are simplified illustrations; real-world vulnerabilities are often more complex and subtle.

Example 1: Insecure Deserialization

```
function process_user_input(user_input) {
    data = deserialize(user_input); // Vulnerable to deserialization attacks
    // … further processing …
}
```

This pseudocode shows a function that deserializes user input without proper validation. A malicious user could craft specially formatted input to inject malicious code during deserialization, potentially leading to arbitrary code execution.
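One common way to reduce this risk is to accept only a data-only format such as JSON and to check the decoded structure before using it. The Python sketch below assumes the payload is a JSON object with `action` and `argument` fields; those field names, the `ALLOWED_ACTIONS` set, and the size limit are hypothetical and not taken from any real Rabbit R1S interface.

```python
import json

# Hypothetical allow-list and size limit, chosen only for illustration.
ALLOWED_ACTIONS = {"search", "send_message", "set_reminder"}
MAX_PAYLOAD_BYTES = 4096


def parse_user_payload(raw: bytes) -> dict:
    """Decode untrusted input as JSON and check its shape before use."""
    if len(raw) > MAX_PAYLOAD_BYTES:
        raise ValueError("payload too large")
    try:
        data = json.loads(raw.decode("utf-8"))
    except (UnicodeDecodeError, json.JSONDecodeError, RecursionError) as exc:
        raise ValueError("payload is not valid JSON") from exc
    if not isinstance(data, dict):
        raise ValueError("payload must be a JSON object")
    action = data.get("action")
    argument = data.get("argument")
    if not isinstance(action, str) or action not in ALLOWED_ACTIONS:
        raise ValueError("unknown action")
    if not isinstance(argument, str):
        raise ValueError("argument must be a string")
    # Return only the expected fields, dropping anything extra.
    return {"action": action, "argument": argument}
```

Unlike formats that can reconstruct arbitrary objects, JSON decoding yields only plain values (dictionaries, lists, strings, numbers), so the attacker has no direct way to trigger code during parsing.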

Example 2: SQL Injection Vulnerability

```
function get_user_data(username) {
    query = "SELECT * FROM users WHERE username = '" + username + "'"; // Vulnerable to SQL injection
    // … execute query …
}
```

This example demonstrates a vulnerable SQL query. A malicious user could inject malicious SQL code into the `username` parameter, potentially allowing them to access or modify sensitive data within the database. Parameterized queries are a crucial safeguard against such attacks.
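As a rough illustration of the parameterized approach, here is a small Python sketch using the standard `sqlite3` module. The `users` table layout and the sample row are assumptions made for the demo; the point is only that the username travels as a bound parameter rather than as part of the SQL string.

```python
import sqlite3


def get_user_data(conn: sqlite3.Connection, username: str):
    """Look up a user with a bound parameter instead of string concatenation."""
    cursor = conn.execute(
        "SELECT id, username, email FROM users WHERE username = ?",
        (username,),
    )
    return cursor.fetchone()


# Demo against an in-memory database with a hypothetical schema.
conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE users (id INTEGER PRIMARY KEY, username TEXT, email TEXT)")
conn.execute(
    "INSERT INTO users (username, email) VALUES (?, ?)",
    ("alice", "alice@example.com"),
)

print(get_user_data(conn, "alice"))        # (1, 'alice', 'alice@example.com')
print(get_user_data(conn, "' OR '1'='1"))  # None: the input is treated as data
```

Because the driver passes the value separately from the statement, the classic `' OR '1'='1` payload is compared literally against the `username` column and matches nothing.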

Example 3: Buffer Overflow

```
function process_command(command) {
    char buffer[100];
    copy(buffer, command); // Vulnerable to buffer overflow if command exceeds 100 characters
    // … process command …
}
```

This snippet shows a function susceptible to a buffer overflow. If the `command` variable exceeds the allocated buffer size, it could overwrite adjacent memory locations, potentially leading to a crash or arbitrary code execution. Proper input validation and bounds checking are essential to prevent this type of vulnerability.
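The bounds check itself is simple to express. The sketch below is written in Python, which is memory-safe, so it only illustrates the length test that code in a language like C would need before copying; the `copy_command` name and the 100-byte limit are assumptions that mirror the pseudocode above.

```python
BUFFER_SIZE = 100  # mirrors the 100-character buffer in the pseudocode


def copy_command(command: bytes, buffer: bytearray) -> int:
    """Copy command into a fixed-size buffer, rejecting oversized input."""
    if len(command) > len(buffer):
        raise ValueError(
            f"command is {len(command)} bytes; limit is {len(buffer)}"
        )
    # Same-length slice assignment, so the buffer never grows.
    buffer[: len(command)] = command
    return len(command)
```

Rejecting oversized input outright is usually safer than silently truncating it, since a truncated command may still be meaningful to the attacker.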

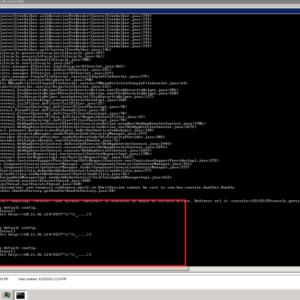

Exploiting the Vulnerability


Let’s imagine a scenario where a malicious actor discovers the vulnerability in the AI Assistant Rabbit R1S’s code, specifically a weakness in its authentication system allowing unauthorized access to user data. This vulnerability, if left unpatched, opens the door to a range of potentially devastating attacks.

This hypothetical attack leverages the authentication flaw to gain unauthorized access to the system. The attacker may not need sophisticated hacking skills; a simple script exploiting the vulnerability might suffice. How much effort the attack takes depends on how easy the flaw is to reach and trigger: a weakness exposed on a public endpoint with no additional checks can require minimal technical expertise.

Attack Steps and Data Exfiltration

The attacker’s first step is to pinpoint the specific weakness in the Rabbit R1S’s authentication system, for example by analyzing publicly available documentation or running vulnerability scans. The attacker then crafts a script or program designed to bypass the authentication process. This script could, for instance, send crafted requests that exploit the flaw, granting the attacker unauthorized access to the system.

Following successful system penetration, the attacker initiates data exfiltration. This process involves the unauthorized removal of sensitive user data from the compromised system. Methods for this could include directly downloading data, using remote access tools, or subtly embedding malicious code that transmits data to a remote server controlled by the attacker. Consider a scenario where the attacker gains access to a database containing user names, passwords, addresses, and financial information. The attacker might then use automated scripts to systematically download this data and transfer it to a hidden server located overseas. The exfiltration process could occur over several days or weeks to avoid detection.

Potential Impact

A successful attack on the Rabbit R1S system, exploiting this vulnerability, could have severe consequences for both users and the system itself. Users could face identity theft, financial fraud, and reputational damage resulting from the unauthorized disclosure of their personal information. The system’s operators could face legal repercussions, financial losses from data breaches, and reputational harm due to compromised user trust. The overall impact could range from minor inconvenience to significant financial and legal ramifications, depending on the sensitivity of the stolen data and the scale of the breach. For example, imagine a large-scale breach exposing thousands of users’ financial data. This could lead to widespread financial losses for users, significant legal costs for the system’s operators, and a massive erosion of public trust in the AI assistant.

Mitigating the Vulnerability

So, you’ve identified a nasty code vulnerability in your AI assistant, Rabbit R1S. Don’t panic! Now’s the time for damage control: patching the hole and beefing up security. Let’s get this sorted before things get really hairy.

This section details how to fix the vulnerability and prevent similar issues in the future. We’ll focus on practical solutions and best practices to ensure your AI assistant is as secure as a vault.

Patching the Vulnerability

The specific patch will depend on the exact nature of the vulnerability discovered in the Rabbit R1S. However, let’s assume the vulnerability allows unauthorized access to sensitive data due to insufficient input validation. A patch would involve implementing robust input sanitization and validation. This would prevent malicious inputs from exploiting the vulnerability.

Here’s a pseudocode example demonstrating a potential patch:

```
function processUserInput(input) {
    // Sanitize the input to remove potentially harmful characters
    sanitizedInput = sanitizeInput(input);

    // Validate the input against expected format and data types
    isValid = validateInput(sanitizedInput);

    if (isValid) {
        // Process the sanitized and validated input
        processSafeInput(sanitizedInput);
    } else {
        // Handle invalid input appropriately (e.g., log the error, return an error message)
        handleError("Invalid input received.");
    }
}

function sanitizeInput(input) {
    // Remove or escape special characters, HTML tags, etc.
    // … (Implementation details depend on the specific vulnerability) …
    return cleanedInput;
}

function validateInput(input) {
    // Check data type, length, format, range, etc. against expected values
    // … (Implementation details depend on the specific vulnerability) …
    return isValid; // true or false
}
```

This pseudocode illustrates the core principle: never trust user input. Always sanitize and validate it before processing.
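To make the sanitize-then-validate flow more concrete, here is one possible Python rendering. The length limit and the allow-list pattern are assumptions chosen for a short text command; a real patch would tailor both to the field being protected.

```python
import re
import unicodedata

MAX_INPUT_LENGTH = 200
# Hypothetical allow-list: word characters, spaces, and basic punctuation.
ALLOWED_PATTERN = re.compile(r"[\w .,?!'-]+")


def sanitize_input(text: str) -> str:
    """Normalize Unicode, drop control/format characters, trim whitespace."""
    normalized = unicodedata.normalize("NFKC", text)
    visible = "".join(
        ch for ch in normalized if unicodedata.category(ch)[0] != "C"
    )
    return visible.strip()


def validate_input(text: str) -> bool:
    """Accept only non-empty, bounded input that matches the allow-list."""
    if not 0 < len(text) <= MAX_INPUT_LENGTH:
        return False
    return ALLOWED_PATTERN.fullmatch(text) is not None


def process_user_input(text: str) -> str:
    """Return the cleaned input, or raise if it fails validation."""
    cleaned = sanitize_input(text)
    if not validate_input(cleaned):
        raise ValueError("Invalid input received.")
    return cleaned
```

The sketch validates against an allow-list rather than trying to strip known-bad characters, because it is easier to enumerate what a field should contain than everything it should not.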

Best Practices for Securing AI Assistant Software

Securing AI assistant software requires a multi-layered approach. Think of it like building a fortress – multiple walls, guards, and traps to deter intruders. Here are some key best practices:

Regular security audits are crucial. Think of them as routine checkups for your AI’s health. These audits identify vulnerabilities before they can be exploited.

Employ secure coding practices. This includes input validation, output encoding, and secure handling of sensitive data. This is like training your AI’s immune system to fight off malicious attacks.

Implement robust access controls. Limit access to sensitive data and functionalities based on the principle of least privilege. Only those who need access should have it. This is like having a strict security protocol for your AI fortress.

Regular software updates are vital. Patches often address known vulnerabilities, reducing the risk of exploitation. This is like constantly reinforcing the walls of your AI fortress.

Employ security testing methodologies such as penetration testing. This involves simulating attacks to identify weaknesses. It’s like a war game for your AI, helping to strengthen its defenses.

Comparing Security Mechanisms

Different security mechanisms address different classes of vulnerability, and they work best in combination. Let’s compare a few:

Input validation, as discussed earlier, is a fundamental defense. It acts as the first line of defense, preventing malicious data from entering the system. Think of it as the castle’s gatekeepers.

Output encoding protects against cross-site scripting (XSS) attacks by encoding special characters before outputting data. This prevents malicious code from being executed in the user’s browser. It’s like the castle’s archers, defending against incoming attacks.
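For HTML output, Python’s standard library already provides the encoding step. The sketch below assumes the assistant echoes user text back into an HTML fragment; the `render_reply` helper is hypothetical.

```python
import html


def render_reply(user_text: str) -> str:
    """Embed untrusted text in an HTML fragment after escaping it."""
    return f"<p>You said: {html.escape(user_text, quote=True)}</p>"


print(render_reply('<script>alert("x")</script>'))
# <p>You said: &lt;script&gt;alert(&quot;x&quot;)&lt;/script&gt;</p>
```

The escaped text is displayed literally by the browser instead of being parsed as markup. Other output contexts (URLs, JavaScript, SQL) each need their own encoding.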

Authentication and authorization mechanisms control access to sensitive resources. This ensures only authorized users can access specific data or functions. This is like the castle’s guards, controlling who enters and leaves.
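The two checks are separate questions: who is making the request, and is that identity allowed to do this? A minimal Python sketch follows, assuming opaque session tokens and a simple role table. The in-memory dictionaries, user names, and permission names are all hypothetical stand-ins for a real identity store.

```python
import hashlib
import hmac
import secrets

# Hypothetical in-memory stores; a real system would use a database.
TOKEN_HASHES = {}  # user -> SHA-256 digest of the issued token
USER_ROLES = {"alice": "owner", "bob": "guest"}
ROLE_PERMISSIONS = {
    "owner": {"read_calendar", "send_email"},
    "guest": {"read_calendar"},
}


def issue_token(user: str) -> str:
    """Create a random token and store only its hash."""
    token = secrets.token_urlsafe(32)
    TOKEN_HASHES[user] = hashlib.sha256(token.encode()).digest()
    return token


def is_authenticated(user: str, token: str) -> bool:
    """Check the presented token using a constant-time comparison."""
    expected = TOKEN_HASHES.get(user)
    if expected is None:
        return False
    presented = hashlib.sha256(token.encode()).digest()
    return hmac.compare_digest(expected, presented)


def is_authorized(user: str, permission: str) -> bool:
    """Check whether the user's role grants the permission."""
    role = USER_ROLES.get(user)
    return permission in ROLE_PERMISSIONS.get(role, set())


def can_perform(user: str, token: str, permission: str) -> bool:
    return is_authenticated(user, token) and is_authorized(user, permission)
```

Here `bob` can read the calendar but cannot send email even with a valid token, which is the principle of least privilege in miniature.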

Data encryption protects data at rest and in transit. This makes it unreadable to unauthorized individuals, even if they gain access to the data. This is like the castle’s treasure vault, protecting valuable assets.

Impact Assessment and Prevention


Understanding the potential consequences of a vulnerability in the AI Assistant Rabbit R1S is crucial for effective mitigation and future prevention. A comprehensive impact assessment helps prioritize remediation efforts and informs the development of robust security practices. This section details the potential impact of the identified vulnerability and outlines preventative measures for future AI assistant development.

Potential Impacts of the Rabbit R1S Vulnerability

The following table outlines the potential impact of the identified code vulnerability, considering severity, impacted areas, likelihood of exploitation, and available mitigations. This assessment is based on the nature of the vulnerability and assumes a successful exploitation. The likelihood is subjective and depends on factors such as the attacker’s skill and resources.

| Severity | Impact Area | Likelihood | Mitigation |
|---|---|---|---|
| High | Data Breach (User Data, Internal System Data) | Medium | Patching the vulnerability, implementing robust access controls. |
| Medium | Denial of Service (DoS) | Low | Strengthening server infrastructure, implementing rate limiting. |
| Low | Unauthorized Access to Limited Functionality | High | Input validation and sanitization, secure coding practices. |
| High | Reputational Damage | Medium | Proactive vulnerability disclosure, swift response to incidents. |
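Of the mitigations in the table, rate limiting is one of the easiest to sketch. The token-bucket limiter below is a generic technique, not something specific to the Rabbit R1S; the class name, parameters, and the injectable `clock` argument (used to make the behavior testable) are assumptions.

```python
import time


class TokenBucket:
    """Allow bursts of up to `capacity` requests, refilled at `rate` per second."""

    def __init__(self, rate: float, capacity: int, clock=time.monotonic):
        self.rate = rate
        self.capacity = capacity
        self.clock = clock
        self.tokens = float(capacity)
        self.updated = clock()

    def allow(self) -> bool:
        """Return True and consume a token if the request may proceed."""
        now = self.clock()
        elapsed = now - self.updated
        self.updated = now
        # Refill in proportion to elapsed time, never above capacity.
        self.tokens = min(self.capacity, self.tokens + elapsed * self.rate)
        if self.tokens >= 1:
            self.tokens -= 1
            return True
        return False
```

A service would typically keep one bucket per client or API key and reject (or delay) requests when `allow()` returns `False`.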

Preventative Measures for Future AI Assistant Development

Proactive security measures are paramount in preventing similar vulnerabilities in future AI assistant development. These measures should be integrated throughout the software development lifecycle (SDLC).

A robust approach involves:

- Secure Coding Practices: Adhering to secure coding guidelines, such as OWASP guidelines, is fundamental. This includes input validation, output encoding, and avoiding common vulnerabilities like SQL injection and cross-site scripting (XSS).

- Regular Security Audits and Penetration Testing: Independent security assessments should be conducted regularly to identify and address potential vulnerabilities before they can be exploited. Penetration testing simulates real-world attacks to expose weaknesses.

- Static and Dynamic Application Security Testing (SAST/DAST): These automated tools can identify vulnerabilities in the codebase during different phases of development. SAST analyzes source code, while DAST tests the running application.

- Software Composition Analysis (SCA): Analyzing the open-source and third-party components used in the application helps identify known vulnerabilities in those dependencies.

- Principle of Least Privilege: Granting only necessary permissions to system components and users minimizes the impact of a potential breach. This limits the damage an attacker can inflict, even if they gain unauthorized access.

- Comprehensive Monitoring and Logging: Real-time monitoring and detailed logging enable early detection of suspicious activities and help in rapid response to security incidents. This includes tracking access attempts, API calls, and other relevant events.
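As a small illustration of the monitoring and logging item, the sketch below logs failed login attempts and flags a source that exceeds a threshold within a sliding window. The threshold, window length, and logger name are assumptions; production systems would usually feed such events into a dedicated monitoring pipeline.

```python
import logging
from collections import defaultdict, deque

logger = logging.getLogger("assistant.security")  # hypothetical logger name

WINDOW_SECONDS = 60
MAX_FAILURES = 5


class FailedLoginMonitor:
    """Track recent failed logins per source and flag bursts."""

    def __init__(self):
        # source -> timestamps of failures inside the current window
        self._failures = defaultdict(deque)

    def record_failure(self, source: str, timestamp: float) -> bool:
        """Log a failure; return True if the source reached the threshold."""
        recent = self._failures[source]
        recent.append(timestamp)
        # Discard failures that have aged out of the window.
        while recent and timestamp - recent[0] > WINDOW_SECONDS:
            recent.popleft()
        logger.warning("failed login from %s (%d in window)", source, len(recent))
        if len(recent) >= MAX_FAILURES:
            logger.error("possible brute-force attempt from %s", source)
            return True
        return False
```

The return value lets the caller react immediately, for example by temporarily blocking the source, while the log records support later investigation.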

Code Reviews as a Vulnerability Prevention Tool

Code reviews are an effective method for identifying and preventing vulnerabilities. A systematic review process involves multiple developers examining each other’s code for potential flaws, following a checklist of common vulnerabilities.

Benefits of code reviews include:

- Early Detection: Vulnerabilities are identified early in the development process, reducing the cost and effort of remediation.

- Improved Code Quality: Reviews promote better coding practices and improve overall code quality, reducing the likelihood of future vulnerabilities.

- Knowledge Sharing: Reviews provide an opportunity for knowledge sharing among developers, improving team expertise in secure coding practices.

- Reduced Errors: A fresh pair of eyes can often spot errors and potential vulnerabilities that the original developer might have missed.

Effective code reviews require a structured approach, clear guidelines, and dedicated time allocated for the process. Tools can assist in automating aspects of code reviews, such as identifying potential issues and tracking review progress.

Illustrative Example: Data Breach Scenario

Imagine a scenario where the Rabbit R1S AI assistant, due to its unpatched vulnerability, becomes a gateway for a sophisticated data breach. The scenario is hypothetical, but authentication bypasses of this kind are a well-known class of flaw in networked services.

The vulnerability, specifically a flaw in the authentication system allowing unauthorized access via a manipulated API call, is exploited by a malicious actor. This actor crafts a series of specially formatted requests that bypass the security checks, granting them elevated privileges within the system.

Compromised Data and Affected Users

The compromised data includes a mix of sensitive user information. This ranges from personally identifiable information (PII) like names, addresses, and email addresses, to more sensitive data such as medical records, financial details, and even biometric data (if the Rabbit R1S was integrated with biometric authentication systems). The affected users are primarily those who utilize the Rabbit R1S for health management, financial transactions, or other sensitive activities. The scale of the breach depends on the number of users connected to the compromised system, potentially impacting thousands or even millions of individuals.

Network Map of the Attack Path

A visual representation of the attack would show a network map illustrating the attacker’s path. The map would highlight the Rabbit R1S server as the central vulnerable component. Arrows would indicate the flow of malicious requests originating from the attacker’s system (possibly a compromised device or a botnet), traversing the network, bypassing the compromised authentication system of the Rabbit R1S, and accessing the sensitive data stored on the server or linked databases. The data flow would be depicted as arrows moving from the vulnerable server to the attacker’s system, showing the exfiltration of the stolen data. The map would also show any intermediary servers or systems involved in the data transfer. For example, a compromised cloud storage service could be shown as an intermediary point. The visualization would clearly show the weak point, the vulnerability in the authentication system, as the critical node in the attack path.

Impact on User Trust and Legal Consequences

The impact of such a breach is multifaceted. The immediate consequence is a significant erosion of user trust in the Rabbit R1S and its parent company. Users may lose confidence in the security of their data and may be hesitant to use the AI assistant or similar services in the future. This loss of trust can translate to significant financial losses for the company, including decreased sales, loss of market share, and damage to brand reputation. Beyond the reputational damage, the company faces substantial legal repercussions. Depending on the jurisdiction and the type of data breached, the company could face hefty fines, lawsuits from affected users, and potential criminal charges. The General Data Protection Regulation (GDPR) in Europe, for example, carries significant penalties for data breaches, and similar regulations exist in other parts of the world. The company might also face regulatory investigations and potential sanctions from data protection authorities. The cost of responding to the breach, including legal fees, public relations efforts, and credit monitoring services for affected users, could also be substantial. This scenario illustrates the severe consequences of neglecting security vulnerabilities, particularly in AI systems handling sensitive personal data.

Conclusion


So, the Rabbit R1S, while seemingly innocent, harbors potential security risks. Understanding these vulnerabilities isn’t just about avoiding a potential headache; it’s about proactively protecting your data and maintaining user trust. By implementing the mitigation strategies and best practices discussed, we can build a safer digital landscape where AI assistants can thrive without compromising security. Let’s make sure our tech future is secure, one line of code at a time.