AI-Powered Detection of Zero-Day Exploits: Forget the Wild West of cybersecurity; the age of AI-powered defense is here. Zero-day exploits, those nasty surprises that leave even the most seasoned security pros scrambling, are finally meeting their match. This isn’t your grandpappy’s antivirus: we’re talking about algorithms that learn, adapt, and flag attacks that no signature has ever described. Get ready to dive into the fascinating world where artificial intelligence is rewriting the rules of digital warfare.

Traditional security methods, like signature-based detection, are playing catch-up in the face of increasingly sophisticated zero-day attacks. These attacks leverage previously unknown vulnerabilities, making them incredibly difficult to detect and defend against. AI, however, offers a powerful alternative. By analyzing vast amounts of data, identifying patterns, and learning from past attacks, AI-powered systems can proactively identify and mitigate these threats before they cause significant damage. This proactive approach is a game-changer, moving cybersecurity from reactive to predictive.

AI-Powered Zero-Day Detection

Source: webflow.com

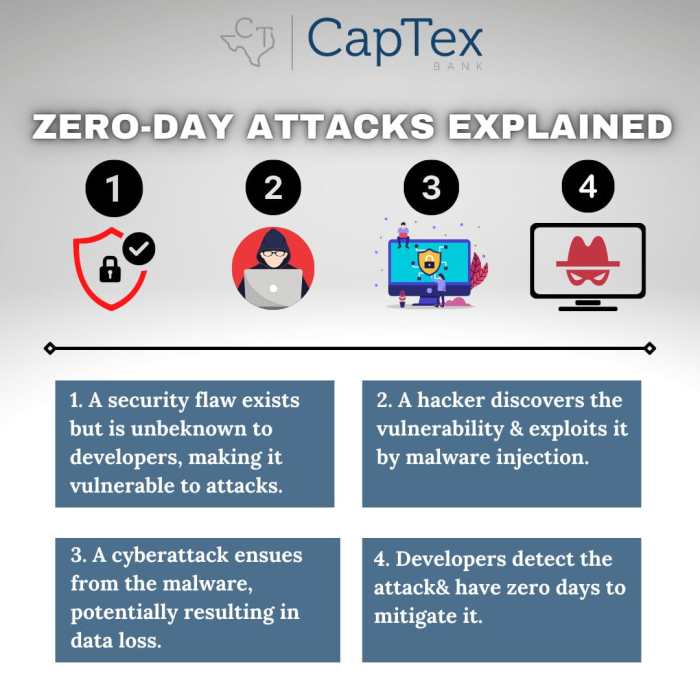

The digital landscape is a constant battlefield, with cybercriminals relentlessly seeking vulnerabilities to exploit. Zero-day exploits, attacks leveraging previously unknown software flaws, represent a particularly potent threat. These attacks can cripple systems, steal sensitive data, and cause significant financial and reputational damage before security patches are even available. The sheer speed and sophistication of these attacks highlight a critical gap in traditional security measures.

The limitations of traditional security methods in detecting zero-day threats are significant. Signature-based antivirus software, for example, relies on identifying known malware signatures, rendering it ineffective against entirely new threats. Intrusion detection systems (IDS) and firewalls, while valuable for detecting known attack patterns, struggle to identify the subtle anomalies indicative of zero-day exploits. These traditional methods are essentially playing catch-up, reacting to attacks rather than proactively preventing them. The sheer volume of software and the ever-evolving nature of cyberattacks make it increasingly difficult for traditional methods to keep pace.

The potential of AI in enhancing zero-day detection capabilities is transformative. AI algorithms, particularly machine learning models, can analyze vast amounts of network traffic, system logs, and application behavior to identify unusual patterns and anomalies that might indicate a zero-day attack. Unlike traditional methods that rely on pre-defined signatures, AI can learn to recognize malicious activity even in the absence of prior examples. This proactive approach allows for the detection and mitigation of threats before they can cause significant damage. Furthermore, AI can analyze code for vulnerabilities, potentially identifying zero-day exploits before they are even deployed in the wild.

Comparison of Traditional and AI-Powered Zero-Day Detection

| Feature | Traditional Methods (Signature-based, IDS/IPS) | AI-Powered Methods |
|---|---|---|
| Detection Mechanism | Relies on known signatures and pre-defined rules. | Analyzes patterns and anomalies in data; learns from examples. |
| Effectiveness against Zero-Days | Ineffective; relies on prior knowledge of threats. | Potentially highly effective; can identify unknown threats. |
| Response Time | Reactive; responds after an attack is detected. | Proactive; can potentially identify and mitigate threats before they cause damage. |
| Scalability | Can be challenging to scale to handle large datasets. | Highly scalable; can analyze vast amounts of data. |

AI Techniques for Zero-Day Detection


Detecting zero-day exploits, attacks that leverage previously unknown vulnerabilities, is a constant arms race between attackers and defenders. AI offers a powerful arsenal in this fight, providing the speed and adaptability needed to identify and respond to these threats before they cause significant damage. By analyzing vast amounts of data and identifying subtle patterns, AI algorithms can detect anomalies indicative of zero-day attacks far more efficiently than traditional methods.

AI’s ability to analyze massive datasets, identify complex patterns, and adapt to evolving attack strategies makes it an indispensable tool in modern cybersecurity. This allows security professionals to focus on more strategic tasks, rather than being bogged down in the tedious analysis of individual alerts. This increased efficiency translates directly into faster response times and minimized damage from successful attacks.

Machine Learning Algorithms for Zero-Day Detection

Several machine learning algorithms are particularly well-suited for identifying zero-day attacks. Anomaly detection algorithms, for example, excel at identifying unusual network traffic or system behavior that deviates from established baselines. These algorithms can flag potentially malicious activity even when they lack explicit examples of the specific attack. Deep learning models, with their ability to learn complex representations from raw data, can analyze network packets, system logs, and other data sources to identify subtle indicators of compromise that might be missed by simpler methods. For instance, a deep learning model might learn to identify patterns in network traffic associated with specific exploit techniques, even if those techniques are novel.
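To make the idea of "deviating from an established baseline" concrete, here is a minimal sketch that uses a plain z-score rather than a trained model. The connection counts and the cutoff of 3.0 are made-up illustration values, not figures from any real deployment.

```python
from statistics import mean, stdev


def fit_baseline(samples):
    """Summarize normal behavior as (mean, standard deviation)."""
    return mean(samples), stdev(samples)


def anomaly_score(value, baseline):
    """Number of standard deviations between value and the baseline mean."""
    mu, sigma = baseline
    if sigma == 0:
        return 0.0 if value == mu else float("inf")
    return abs(value - mu) / sigma


# Hypothetical outbound connections per minute seen during normal operation.
normal_connections = [12, 15, 11, 14, 13, 16, 12, 14]
baseline = fit_baseline(normal_connections)

THRESHOLD = 3.0  # assumed cutoff; a real system would tune this on its own traffic

for observed in (13, 95):
    score = anomaly_score(observed, baseline)
    print(observed, "anomalous" if score > THRESHOLD else "normal")
```

Nothing in this sketch knows what an attack looks like. It only knows what normal looks like, which is exactly why the approach can flag activity it has never seen before.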

Natural Language Processing for Threat Intelligence

Natural language processing (NLP) plays a crucial role in analyzing unstructured data sources such as security logs and threat intelligence reports. By extracting key information and relationships from these sources, NLP can help identify potential zero-day attacks. For example, NLP can analyze security advisories to identify newly discovered vulnerabilities that could be exploited by attackers. It can also identify mentions of zero-day exploits in dark web forums or other online communities, providing early warning of potential threats. NLP-style techniques can even be applied to malware code itself, treating sequences of instructions or tokens as text and looking for patterns or anomalies that might indicate a novel attack technique.
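As a rough illustration of pulling structured signals out of free text, the sketch below uses regular expressions and keyword matching, a deliberately simple stand-in for a real NLP model. The watch list of urgency terms and the sample advisory (including its CVE number) are invented for the example.

```python
import re

# CVE identifiers follow the form CVE-<year>-<four or more digits>.
CVE_PATTERN = re.compile(r"\bCVE-\d{4}-\d{4,}\b", re.IGNORECASE)

# Hypothetical watch list; a production system would learn or curate these.
URGENCY_TERMS = ("zero-day", "0-day", "exploited in the wild", "no patch", "unpatched")


def triage(text):
    """Extract CVE identifiers and urgency phrases from an advisory."""
    lowered = text.lower()
    cves = sorted({match.upper() for match in CVE_PATTERN.findall(text)})
    terms = [term for term in URGENCY_TERMS if term in lowered]
    return {"cves": cves, "urgency_terms": terms, "priority": len(terms)}


# Invented advisory text for illustration only.
advisory = (
    "Vendor confirms CVE-2099-12345 is being exploited in the wild; "
    "affected systems remain unpatched."
)
print(triage(advisory))
```

A trained language model would handle paraphrase, misspellings, and slang far better than a keyword list, but the output shape (entities plus a priority signal) is the part that feeds the rest of a detection pipeline.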

Comparison of Supervised, Unsupervised, and Reinforcement Learning

Supervised learning algorithms require labeled datasets of known attacks and normal behavior. While effective for known threats, their performance on zero-day attacks is limited by the lack of labeled examples. Unsupervised learning, on the other hand, can identify anomalies without labeled data, making it more suitable for zero-day detection. Algorithms like clustering and anomaly detection can identify unusual patterns in network traffic or system behavior that might indicate a zero-day attack. Reinforcement learning can be used to train agents that learn to detect and respond to zero-day attacks through interaction with a simulated environment. This approach allows for the development of adaptive systems that can learn to identify and respond to new attack techniques as they emerge.
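The gap between supervised and unsupervised approaches is easy to see on toy data. In this sketch, a nearest-centroid classifier (standing in for a supervised model) and a distance-from-normal check (standing in for an unsupervised one) both look at an attack that resembles nothing in the labeled set. The two features and all data points are hypothetical.

```python
from math import dist


def centroid(points):
    """Component-wise mean of a list of equal-length points."""
    return tuple(sum(axis) / len(points) for axis in zip(*points))


# Toy features (hypothetical): (requests per second, failed logins per minute).
normal = [(1.0, 1.0), (1.2, 0.8), (0.9, 1.1), (1.1, 1.0)]
known_attack = [(10.0, 1.0), (9.5, 1.2), (10.5, 0.9)]

normal_center = centroid(normal)
attack_center = centroid(known_attack)


def supervised_label(point):
    """Nearest-centroid classifier trained on both labeled classes."""
    closer_to_attack = dist(point, attack_center) < dist(point, normal_center)
    return "attack" if closer_to_attack else "normal"


# Unsupervised view: only normal data is used; anything far from it is flagged.
radius = 3 * max(dist(p, normal_center) for p in normal)


def unsupervised_flag(point):
    return dist(point, normal_center) > radius


novel = (1.0, 10.0)  # a burst of failed logins, unlike any labeled attack
print("supervised says:", supervised_label(novel))
print("unsupervised flags it:", unsupervised_flag(novel))
```

The supervised model has to pick between the two classes it knows, and the novel point happens to sit closer to "normal" than to the one attack it was taught. The unsupervised check has no such constraint: it only asks whether the point is far from normal.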

Hypothetical AI Model Architecture for Zero-Day Detection

A robust AI model for zero-day detection could incorporate several components. A data ingestion layer would collect data from various sources, including network sensors, system logs, and threat intelligence feeds. A preprocessing layer would clean and normalize the data, preparing it for analysis. A feature extraction layer would extract relevant features from the data, such as network traffic patterns, system calls, and API usage. A model layer would employ a combination of machine learning algorithms, such as anomaly detection and deep learning, to identify potential zero-day attacks. A response layer would trigger appropriate actions, such as blocking malicious traffic or alerting security personnel. Finally, a feedback loop would continuously refine the model’s performance based on its detection accuracy and the characteristics of new attacks. This iterative process ensures that the model remains effective against evolving threats. For example, a real-world implementation might leverage anomaly detection to flag suspicious activity, followed by a deep learning model to analyze the flagged events and determine their severity. The system could then trigger automated responses, such as isolating infected systems, and provide alerts to security analysts for further investigation.
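The layers described above can be sketched as a single small class. This is an illustration under heavy assumptions: the class name `ZeroDayPipeline`, the `host` and `bytes_out` fields, and the z-score "model" are all placeholders for whatever a real system would use.

```python
from dataclasses import dataclass, field
from statistics import mean, stdev


@dataclass
class ZeroDayPipeline:
    threshold: float = 3.0                        # assumed z-score cutoff
    baseline: list = field(default_factory=list)  # feature values judged benign
    alerts: list = field(default_factory=list)

    def preprocess(self, raw):
        """Preprocessing layer: normalize names and types, reject bad records."""
        try:
            return {"host": str(raw["host"]).lower(), "bytes_out": float(raw["bytes_out"])}
        except (KeyError, TypeError, ValueError):
            return None

    def extract_features(self, record):
        """Feature layer: one numeric feature keeps the sketch small."""
        return record["bytes_out"]

    def score(self, feature):
        """Model layer: z-score against the baseline (stand-in for a real model)."""
        if len(self.baseline) < 2:
            return 0.0
        sigma = stdev(self.baseline)
        return abs(feature - mean(self.baseline)) / sigma if sigma else 0.0

    def respond(self, record, score):
        """Response layer: record an alert; a real system might also block traffic."""
        self.alerts.append((record["host"], round(score, 1)))

    def ingest(self, raw):
        """Ingestion entry point: run one event through every layer."""
        record = self.preprocess(raw)
        if record is None:
            return False
        feature = self.extract_features(record)
        score = self.score(feature)
        if score > self.threshold:
            self.respond(record, score)
            return True
        self.baseline.append(feature)  # feedback loop: benign events refine the baseline
        return False


pipeline = ZeroDayPipeline()
for size in (500, 520, 480, 510, 495):
    pipeline.ingest({"host": "Web-01", "bytes_out": size})
pipeline.ingest({"host": "Web-01", "bytes_out": 50_000})
print(pipeline.alerts)
```

One design choice worth noting: only events scored as benign are fed back into the baseline. Feeding flagged events back in would let a slow, patient attacker gradually teach the system that malicious behavior is normal.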

Data Sources and Feature Engineering for AI-Powered Systems

Building a robust AI-powered zero-day detection system hinges on the quality and diversity of the data used to train it, as well as how that data is processed and transformed into meaningful features. Think of it like baking a cake – you need the right ingredients (data) and the right recipe (feature engineering) to achieve a delicious result (accurate detection). Getting this right is crucial for effective zero-day threat hunting.

The effectiveness of any AI model relies heavily on the features it’s trained on. Poorly chosen or engineered features can lead to a model that’s inaccurate, inefficient, or even completely useless. Therefore, careful consideration of data sources and feature engineering is paramount.

Relevant Data Sources for Training

Zero-day detection requires a multifaceted approach to data collection. A single data source is unlikely to provide sufficient information for comprehensive threat identification. Instead, a combination of diverse data streams, such as network traffic, system logs, application behavior, and threat intelligence feeds, offers a more holistic view. This allows the AI to learn patterns and anomalies indicative of malicious activity that might be missed by analyzing a single source in isolation.

Feature Engineering for Effective Zero-Day Detection

Feature engineering is the process of transforming raw data into features that are more informative and useful for the AI model. This involves selecting relevant features, transforming them into a suitable format, and potentially creating new features by combining existing ones. The goal is to create features that are highly predictive of zero-day attacks while minimizing noise and irrelevant information. This step is crucial because raw data like network packets or system logs are often too complex and high-dimensional for a machine learning model to handle effectively.
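Here is a small sketch of what that transformation can look like for a single network connection record. The field names, the list of "common" ports, and the off-hours window are assumptions chosen for illustration; real feature sets depend on the environment being monitored.

```python
import math

COMMON_PORTS = {22, 25, 53, 80, 443}  # assumed allow-list for this example


def engineer_features(conn):
    """Turn one raw connection record into a small numeric feature dict."""
    sent, received = conn["bytes_sent"], conn["bytes_received"]
    total = sent + received
    return {
        # Log scaling compresses the huge range of transfer sizes.
        "log_total_bytes": math.log1p(total),
        # A combined feature: share of traffic leaving the host.
        "upload_ratio": sent / total if total else 0.0,
        # Categorical port reduced to a binary flag.
        "uncommon_port": 0.0 if conn["dst_port"] in COMMON_PORTS else 1.0,
        # Hour of day reduced to an off-hours flag (22:00 to 05:59, assumed).
        "off_hours": 1.0 if conn["hour"] < 6 or conn["hour"] >= 22 else 0.0,
    }


record = {"bytes_sent": 9000, "bytes_received": 1000, "dst_port": 4444, "hour": 3}
print(engineer_features(record))
```

Four numbers are far easier for a model to work with than a raw packet capture, and each one encodes a bit of domain knowledge about what suspicious traffic tends to look like.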

Examples of Effective Features

A well-engineered feature set is the key to a successful zero-day detection system. Here are some examples of features that have proven effective:

- System Call Sequences: The order and frequency of system calls made by a process can reveal malicious behavior. Unusual sequences or unusually frequent calls to specific system calls can be strong indicators of a zero-day attack. For instance, a sudden surge in calls related to file system manipulation might suggest malware attempting to encrypt or delete files.

- Network Traffic Patterns: Analyzing network traffic for unusual patterns, such as high volumes of encrypted traffic to unknown destinations or connections to known malicious IP addresses, can be highly indicative of a zero-day attack. Unexpectedly large data transfers or unusual communication protocols are also strong indicators.

- Opcode N-grams: Analyzing sequences of opcodes (machine instructions) in malware samples can reveal patterns indicative of malicious behavior. Unusual sequences or frequencies of specific opcodes can signal the presence of previously unseen malware.

- API Call Frequency and Order: Similar to system calls, monitoring the frequency and order of API calls made by a program can identify malicious activity. Unusual patterns or high frequencies of specific API calls can be indicative of a zero-day attack. For example, an application suddenly making numerous calls to functions related to network communication might indicate a backdoor.

- Behavioral Features: These go beyond static analysis and focus on the dynamic behavior of a program. Examples include process creation patterns, memory allocation patterns, and file system access patterns. Unusual or unexpected behaviors can suggest malicious intent, even if the underlying code is obfuscated.
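Several of the features above (system call sequences, opcode n-grams, API call order) share one underlying trick: slide a window over a sequence and count what you see. The sketch below builds an n-gram profile from benign traces and measures how much of a new trace is unfamiliar. The traces are invented and far shorter than real ones.

```python
from collections import Counter


def ngrams(sequence, n=3):
    """All contiguous windows of length n, as tuples."""
    return [tuple(sequence[i:i + n]) for i in range(len(sequence) - n + 1)]


def ngram_profile(traces, n=3):
    """Count every n-gram observed across a set of benign traces."""
    profile = Counter()
    for trace in traces:
        profile.update(ngrams(trace, n))
    return profile


def unseen_ratio(trace, profile, n=3):
    """Fraction of the trace's n-grams that never appeared in the profile."""
    grams = ngrams(trace, n)
    if not grams:
        return 0.0
    return sum(1 for gram in grams if gram not in profile) / len(grams)


# Hypothetical system call traces.
benign_traces = [
    ["open", "read", "close", "open", "read", "close"],
    ["open", "read", "write", "close"],
]
profile = ngram_profile(benign_traces)

suspect = ["open", "read", "mmap", "mprotect", "execve", "close"]
print(unseen_ratio(suspect, profile))
print(unseen_ratio(["open", "read", "close"], profile))
```

The same three functions work unchanged on opcode or API call sequences, since they only care about order, not about what the individual tokens mean.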

Challenges and Limitations of AI in Zero-Day Detection

AI-powered zero-day detection, while promising, faces significant hurdles. The inherent nature of zero-day exploits – their novelty and the lack of prior data – creates a complex challenge for even the most sophisticated AI systems. These limitations stem from data scarcity, inherent biases in training data, susceptibility to adversarial attacks, and the ever-present risk of false positives and negatives.

Limited Zero-Day Data for Training

Training robust AI models requires vast amounts of data, a resource notably absent in the zero-day threat landscape. Zero-day exploits, by definition, are unknown until they are deployed, making the collection of labeled training data extremely difficult and time-consuming. This data scarcity often leads to models that are undertrained and perform poorly when confronted with genuinely novel attacks. The lack of diverse examples of zero-day attacks hinders the AI’s ability to generalize and accurately identify unseen threats. For example, a model trained primarily on one type of malware might fail to detect a completely different type of zero-day attack, even if the underlying techniques are similar. This necessitates innovative approaches to data augmentation and synthetic data generation to improve model robustness.

Biases in AI Models and Their Impact on Detection Accuracy

AI models are only as good as the data they are trained on. If the training data reflects existing biases, the resulting model will likely inherit and amplify those biases, leading to skewed detection accuracy. For instance, if the training dataset primarily consists of attacks targeting specific operating systems or software versions, the model might exhibit lower detection rates for attacks targeting less-represented systems. This bias can lead to blind spots in the detection system, leaving certain types of zero-day exploits undetected. Addressing this requires careful curation of training datasets to ensure representation of diverse attack vectors and target environments. Regular audits of model performance across different data subsets can help identify and mitigate these biases.
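An audit across data subsets can be as simple as computing the detection rate per group and looking for gaps. The sketch below assumes evaluation results arrive as `(group, was_attack, was_detected)` tuples; the groups, the results, and the 0.2 gap tolerance are all made up.

```python
from collections import defaultdict


def detection_rate_by_group(results):
    """Share of real attacks that were detected, computed per group."""
    caught = defaultdict(int)
    total = defaultdict(int)
    for group, was_attack, was_detected in results:
        if was_attack:
            total[group] += 1
            caught[group] += int(was_detected)
    return {group: caught[group] / total[group] for group in total}


# Invented evaluation results: (target platform, was_attack, was_detected).
results = [
    ("windows", True, True), ("windows", True, True),
    ("windows", True, True), ("windows", True, False),
    ("linux", True, True), ("linux", True, False),
    ("linux", True, False), ("linux", True, False),
    ("linux", False, False),  # benign sample, ignored by the detection rate
]

rates_by_group = detection_rate_by_group(results)
best = max(rates_by_group.values())
MAX_GAP = 0.2  # assumed tolerance before a group counts as a blind spot
lagging = [g for g, rate in rates_by_group.items() if best - rate > MAX_GAP]

print(rates_by_group)
print("possible blind spots:", lagging)
```

A gap like this does not prove the model is biased, but it tells you where to look first: usually at how well that group was represented in the training data.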

Adversarial Attacks and Evasion of AI-Based Detection Systems

Sophisticated attackers are aware of the increasing reliance on AI for security, and they actively seek ways to circumvent these systems. Adversarial attacks involve crafting malicious code that is specifically designed to evade AI-based detection. These attacks often exploit subtle vulnerabilities in the AI model’s decision-making process, effectively camouflaging malicious code to appear benign. For example, an attacker might slightly modify a piece of malware to alter its feature vector in a way that deceives the AI model without significantly changing its functionality. This requires the development of more robust and resilient AI models capable of detecting even subtly altered malicious code. Techniques like adversarial training, which involves exposing the model to adversarial examples during training, can enhance its resilience.

Mitigating False Positives and False Negatives

The risk of false positives (flagging benign activity as malicious) and false negatives (missing malicious activity) is inherent in any detection system, and AI-powered systems are no exception. False positives can lead to disruptions in normal operations and user frustration, while false negatives can have severe security consequences. Mitigating these risks requires a multi-faceted approach. This includes rigorous model validation and testing using diverse datasets, incorporating multiple detection methods (e.g., combining AI with signature-based detection), and implementing human-in-the-loop verification to review flagged events. Employing anomaly detection techniques in conjunction with AI models can help identify unusual patterns that might indicate a zero-day attack, even in the absence of sufficient training data for specific exploits. Furthermore, continuous monitoring and model retraining are crucial to adapt to the evolving threat landscape.
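The trade-off between the two error types shows up as soon as you move the alert threshold. This sketch counts outcomes at a few thresholds over a tiny, invented set of anomaly scores with known ground truth.

```python
def confusion(scores, labels, threshold):
    """Return (true_pos, false_pos, true_neg, false_neg) at a given threshold."""
    tp = fp = tn = fn = 0
    for score, is_attack in zip(scores, labels):
        flagged = score >= threshold
        if flagged and is_attack:
            tp += 1
        elif flagged:
            fp += 1
        elif is_attack:
            fn += 1
        else:
            tn += 1
    return tp, fp, tn, fn


def rates(tp, fp, tn, fn):
    """Error rates derived from the four outcome counts."""
    return {
        "false_positive_rate": fp / (fp + tn) if fp + tn else 0.0,
        "false_negative_rate": fn / (fn + tp) if fn + tp else 0.0,
        "precision": tp / (tp + fp) if tp + fp else 0.0,
    }


# Invented anomaly scores and ground truth (True means a real attack).
scores = [0.1, 0.2, 0.3, 0.4, 0.6, 0.7, 0.8, 0.9]
labels = [False, False, False, True, False, True, True, True]

for threshold in (0.35, 0.5, 0.65):
    print(threshold, rates(*confusion(scores, labels, threshold)))
```

On this toy data, lowering the threshold to 0.35 catches every attack at the cost of one false alarm, while raising it to 0.65 removes the false alarm but lets one attack through. Which side to favor is an operational decision, not a purely technical one.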

Future Directions and Research Opportunities

The field of AI-powered zero-day detection is rapidly evolving, presenting exciting opportunities for innovation and improvement. Current limitations highlight areas ripe for research, promising more robust and reliable systems in the future. Focusing on explainability, algorithmic advancements, and the potential of quantum computing can significantly enhance our ability to proactively defend against sophisticated cyber threats.

The integration of explainable AI (XAI) is crucial for building trust and understanding in these complex systems. Without transparency, it’s difficult to validate the accuracy of AI-driven threat assessments and to pinpoint the reasons behind specific detections. This lack of transparency can hinder adoption and limit the effectiveness of these systems. Advancements in XAI will allow security professionals to better understand the reasoning behind AI’s decisions, ultimately leading to improved confidence and more effective responses to potential threats.

Explainable AI for Enhanced Transparency and Trust

Explainable AI (XAI) techniques, such as LIME (Local Interpretable Model-agnostic Explanations) and SHAP (SHapley Additive exPlanations), can provide insights into the decision-making process of AI-powered zero-day detection systems. By highlighting the key features that contribute to a detection, XAI can help security analysts assess the validity of the alert and understand the underlying threat. For example, if an AI flags a particular file as malicious, XAI could reveal the specific code patterns or behavioral characteristics that triggered the alert, facilitating a more informed and efficient response. This increased transparency fosters trust and encourages wider adoption of AI-driven security solutions.
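To show the kind of output an analyst would get, here is a sketch of additive feature attribution for a hypothetical linear scoring model. It does not use the LIME or SHAP libraries. It computes each feature's contribution as weight times deviation from the baseline mean, which for a linear model with independent features is the same quantity SHAP assigns. The weights, means, and sample are invented.

```python
def explain(weights, baseline_means, sample):
    """Rank features by how much each pushed the score away from baseline."""
    contributions = {
        name: weights[name] * (sample[name] - baseline_means[name])
        for name in weights
    }
    return sorted(contributions.items(), key=lambda item: abs(item[1]), reverse=True)


# Hypothetical linear detector: score = sum(weight * feature).
weights = {"uncommon_port": 2.0, "upload_ratio": 4.0, "off_hours": 0.5}
baseline_means = {"uncommon_port": 0.1, "upload_ratio": 0.3, "off_hours": 0.2}

flagged_sample = {"uncommon_port": 1.0, "upload_ratio": 0.9, "off_hours": 0.0}

ranked = explain(weights, baseline_means, flagged_sample)
for name, contribution in ranked:
    print(f"{name}: {contribution:+.2f}")
```

An analyst reading this output learns that the alert was driven mostly by the upload ratio and the unusual port, and that the time of day actually argued slightly against it. That is enough to decide where to start investigating.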

Advancements in AI Algorithms and Data Sources

Improvements in AI algorithms and access to richer datasets are key to further enhancing zero-day detection capabilities. The development of more sophisticated deep learning models, such as advanced recurrent neural networks (RNNs) and graph neural networks (GNNs), can improve the detection of subtle and complex attack patterns. Moreover, incorporating diverse data sources, including network traffic logs, system calls, and even unstructured data like emails and documents, can provide a more holistic view of potential threats. For instance, combining network flow analysis with natural language processing of phishing emails could improve the detection of sophisticated social engineering attacks. This multi-faceted approach to data collection will create more comprehensive and accurate threat models.

Research Plan: Improving Model Robustness Against Adversarial Attacks

A focused research plan could investigate improving the robustness of AI-powered zero-day detection models against adversarial attacks. This plan would involve: (1) developing a benchmark dataset of adversarial examples designed to evade current detection models; (2) evaluating the performance of various state-of-the-art AI models against this dataset; (3) exploring defensive techniques, such as adversarial training and data augmentation, to improve model robustness; and (4) developing metrics to quantify the effectiveness of these defensive techniques. The success of this research would significantly enhance the resilience of AI-powered security systems against sophisticated attacks. For example, the research could focus on creating robust models that can detect malware samples even after they’ve been subtly modified to bypass detection mechanisms.

Impact of Quantum Computing on Zero-Day Detection

The advent of quantum computing presents both challenges and opportunities for zero-day detection. Large-scale quantum computers could potentially break widely used public-key encryption algorithms, creating vulnerabilities that need to be addressed. They may also offer speedups for some pattern recognition and anomaly detection tasks, although whether such speedups will materialize on practical security workloads remains an open question. If they do, the added computational power could enable AI models to analyze vastly larger datasets and identify extremely subtle attack patterns more efficiently than current classical algorithms. For example, quantum machine learning algorithms might be used to identify complex relationships between seemingly disparate data points, supporting the proactive detection of sophisticated, multi-stage attacks that are hard for classical systems to spot. However, research is still needed to fully understand and harness this potential.

Case Studies and Examples

AI’s application in zero-day vulnerability detection is still relatively nascent, but several promising examples highlight its potential. While many instances remain confidential due to security concerns, we can examine publicly available information and extrapolate potential applications to illustrate the power of AI in this field. This section will explore both real-world examples and hypothetical scenarios, focusing on the technical details and methodologies involved.

Real-world examples of AI detecting zero-day vulnerabilities are often shrouded in secrecy for obvious reasons. Companies that successfully use AI for this purpose rarely publicize the specifics of their methods to avoid tipping off potential attackers. However, we can infer a good deal from publicly available information about AI’s role in threat detection and incident response. Many cybersecurity firms leverage machine learning algorithms to analyze network traffic, system logs, and other data sources for anomalies that could indicate malicious activity. These anomalies, often subtle and previously unseen, could be indicative of a zero-day exploit. The models learn what normal behavior and known attacks look like, and activity that deviates from the normal baseline without matching any known attack triggers an alert as a potential zero-day. The specifics of the algorithms (e.g., type of neural network, feature engineering techniques) are generally proprietary information.

AI-Powered Zero-Day Detection in Web Servers

This example illustrates how AI could be deployed to detect a zero-day vulnerability targeting a web server.

The process involves several key steps:

- Data Collection: Gather diverse data streams from the web server, including system logs (access logs, error logs, security logs), network traffic data (packets, flows), and potentially even application-specific logs.

- Feature Engineering: Extract relevant features from the raw data. This might include things like request patterns, unusual HTTP headers, anomalous file access attempts, or unexpected system calls. These features should be designed to capture deviations from normal server behavior.

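- Model Training: Train a machine learning model (e.g., a recurrent neural network or a support vector machine) using labeled data representing known attacks and normal server activity. This training phase is crucial for the AI to learn to distinguish between benign and malicious activity. Because zero-day attacks have no labeled examples by definition, the normal-activity data matters most: it defines the baseline that later deviations are measured against.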

- Anomaly Detection: Deploy the trained model to monitor the web server in real time. The model analyzes incoming data streams and flags any significant deviations from the learned patterns as potential zero-day attacks. This could involve setting thresholds for anomaly scores and generating alerts when those thresholds are exceeded.

- Alerting and Response: When an anomaly is detected, the system generates an alert, notifying security personnel. This allows for a rapid response, potentially containing the attack before significant damage occurs.
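The steps above can be sketched end to end on a handful of access log lines. To stay short, this version fits only on normal traffic (a simplification of the Model Training step) and uses two hand-picked features. The log lines, the character list, and the threshold are invented for illustration.

```python
import re
from statistics import mean, stdev

# Matches the quoted request part of a common-style access log line.
REQUEST = re.compile(r'"[A-Z]+ (?P<path>\S+) [^"]*"')
SPECIAL = "%;'<>(){}$|"  # assumed set of characters worth counting
THRESHOLD = 3.0          # assumed alert cutoff


def features(line):
    """Feature engineering: numeric features from one log line, or None."""
    match = REQUEST.search(line)
    if match is None:
        return None
    path = match["path"]
    return {
        "path_length": float(len(path)),
        "special_chars": float(sum(path.count(ch) for ch in SPECIAL)),
    }


def fit(lines):
    """Model training: per-feature (mean, stdev) over normal traffic."""
    rows = [row for row in map(features, lines) if row]
    return {key: (mean([r[key] for r in rows]), stdev([r[key] for r in rows]))
            for key in rows[0]}


def score(line, model):
    """Anomaly detection: largest per-feature z-score for this request."""
    row = features(line)
    if row is None:
        return float("inf")  # unparseable requests are treated as suspicious
    return max(abs(row[key] - mu) / (sigma or 1.0)
               for key, (mu, sigma) in model.items())


PREFIX = '10.0.0.5 - - [01/Jan/2030:10:00:00 +0000] '
normal_lines = [
    PREFIX + '"GET /index.html HTTP/1.1" 200 512',
    PREFIX + '"GET /about.html HTTP/1.1" 200 734',
    PREFIX + '"GET /products/list HTTP/1.1" 200 2048',
    PREFIX + '"POST /login HTTP/1.1" 302 0',
    PREFIX + '"GET /images/logo.png HTTP/1.1" 200 4096',
]
model = fit(normal_lines)

incoming = [
    PREFIX + '"GET /contact.html HTTP/1.1" 200 900',
    PREFIX + '"GET /search?q=%3Cscript%3E%27;%7B%7D%24%28x%29 HTTP/1.1" 500 0',
]
for line in incoming:
    if score(line, model) > THRESHOLD:  # alerting and response
        print("ALERT:", line)
```

A production system would watch many more features and would hand the alert to a response workflow rather than printing it, but the shape is the same: collect, featurize, fit, score, alert.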

Visual Representation of AI-Powered Zero-Day Detection

Imagine a flowchart. The first box is labeled “Data Ingestion” and shows various data streams (system logs, network traffic, etc.) flowing into a central processing stage. The next box is “Feature Extraction,” where the raw data is transformed into numerical features. The third box is “AI Model (e.g., Neural Network),” showing a complex network processing the features. The fourth box is “Anomaly Score,” displaying a numerical score representing the likelihood of a zero-day attack. The final box is “Alert Generation,” indicating an alert triggered if the anomaly score exceeds a predefined threshold. Arrows connect each box, illustrating the flow of data and information.

Final Thoughts


The future of cybersecurity is undeniably intertwined with artificial intelligence. AI-powered zero-day detection isn’t just a futuristic concept; it’s a rapidly evolving reality. While challenges remain – data limitations, adversarial attacks, and the need for explainable AI – the potential benefits are too significant to ignore. As AI algorithms become more sophisticated and access to relevant data increases, we can expect even more robust and effective zero-day detection systems. The ongoing arms race between attackers and defenders is now powered by algorithms, and that’s a fight we can actually win.